Listing a business on Google: Listing a business on Google involves creating a Google My Business profile, verifying the location, and optimizing the listing with accurate information, photos, and customer reviews. This helps improve local visibility, attract more customers, and build a stronger online reputation.

local business citations: Local business citations are online mentions of a company's name, address, and phone number. By ensuring consistency and accuracy across directories, businesses can improve local search visibility, strengthen their local reputation, and attract more nearby customers.

local intent keywords: Local intent keywords are phrases that indicate a user is searching for nearby products or services.

Local link building: Local link building focuses on acquiring backlinks from businesses, organizations, and directories within your geographic area. By building local connections, you improve visibility in local search results and strengthen your site's authority in the region.

Local SEO: Local SEO focuses on optimizing a business's online presence to attract customers in a specific geographic area. By targeting location-based keywords, improving local citations, and managing online reviews, businesses can dominate local search results and build a stronger community presence.

Local SEO agency: A local SEO agency specializes in helping businesses improve their visibility in region-specific searches. By focusing on location-based keywords, optimizing Google My Business listings, and building local citations, these agencies connect businesses with nearby customers and enhance their community presence.

Local SEO Australia: Local SEO services in Australia focus on improving a business's online presence in a specific region. By targeting location-based keywords, optimizing local directories, and managing reviews, these services help businesses connect with nearby customers and increase foot traffic.

Local SEO services: Local SEO services optimize a business's online presence within a specific region. By targeting location-based keywords, managing directory listings, and creating geo-targeted content, these services connect businesses with nearby customers and help them dominate local search results.

Local SEO services Sydney: Local SEO services in Sydney focus on optimizing a business's digital presence within a specific region. These services include local keyword research, Google My Business management, and geo-targeted content strategies, all aimed at helping businesses connect with nearby customers and enhance their local reputation.

Local SEO specialists: Local SEO specialists focus on optimizing a business's online presence within a specific region. By targeting local keywords, managing directory listings, and creating location-specific content, these specialists help businesses attract more local customers and improve their community reputation.

Local SEO Sydney: Local SEO services in Sydney focus on optimizing a business's online presence to attract customers in a specific geographical area. By leveraging strategies such as Google My Business optimization, local keyword targeting, and local link building, businesses can dominate local search results, increase foot traffic, and build strong community connections.

Local SEO Sydney: Local SEO in Sydney targets geographically relevant search terms to connect businesses with nearby customers. By optimizing local directories, managing online reviews, and creating location-specific content, these strategies increase visibility and attract more foot traffic to brick-and-mortar stores.

long-form content keywords: Long-form content keywords support in-depth articles that thoroughly address a topic. These keywords help you capture search traffic from users seeking detailed information and enhance your content's authority.

long-form content optimization: Long-form content optimization involves refining detailed, in-depth articles to improve search visibility and user engagement. By incorporating relevant keywords, structuring content clearly, and adding multimedia elements, businesses can rank higher and provide more value to readers.

long-tail keywords: Long-tail keywords are more specific, less competitive search terms that often have higher conversion rates. By targeting these keywords, businesses can reach a more focused audience, improve rankings, and attract highly qualified traffic.

long-tail keywords: Long-tail keywords are more specific, less competitive phrases that often yield higher conversion rates. These terms attract a more targeted audience, making it easier to rank well and generate quality traffic.

low-competition keywords: Low-competition keywords are easier to rank for because fewer websites target them.

low-competition long-tail keywords: Low-competition long-tail keywords are detailed phrases that are easier to rank for due to limited competition. These keywords help you gain visibility and attract targeted traffic without extensive SEO resources.

LSI keywords: Latent Semantic Indexing (LSI) keywords are closely related terms that help search engines understand context.

market-specific keywords: Market-specific keywords focus on the unique terms used within a particular industry. Targeting these keywords helps you appeal directly to your niche audience and improve relevancy.

meta description enhancement: Improving meta descriptions makes them more descriptive, engaging, and keyword-rich. A well-crafted meta description helps attract clicks, provides a clear summary of the content, and signals relevance to search engines.

Screenshot

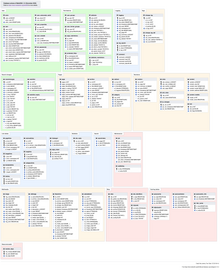

The Main Page of the English Wikipedia running an alpha version of MediaWiki 1.40

| Original author(s) | |
|---|---|
| Developer(s) | Wikimedia Foundation |
| Initial release | January 25, 2002 |
| Stable release | 1.43.0[1] |
| Repository | |
| Written in | PHP[2] |
| Operating system | Windows, macOS, Linux, FreeBSD, OpenBSD, Solaris |
| Size | 79.05 MiB (compressed) |
| Available in | 459[3] languages |
| Type | Wiki software |
| License | GPLv2+[4] |
| Website | mediawiki |

MediaWiki is free and open-source wiki software originally developed by Magnus Manske for use on Wikipedia, first released on January 25, 2002, and further improved by Lee Daniel Crocker;[5][6] development has since been coordinated by the Wikimedia Foundation. It powers several wiki hosting websites across the Internet, as well as most websites hosted by the Wikimedia Foundation, including Wikipedia, Wiktionary, Wikimedia Commons, Wikiquote, Meta-Wiki and Wikidata, which define a large part of the software's requirements.[7] Besides its usage on Wikimedia sites, MediaWiki has been used as a knowledge management and content management system on websites such as Fandom, wikiHow and major internal installations like Intellipedia and Diplopedia.

MediaWiki is written in the PHP programming language and stores all text content in a database. The software is optimized to efficiently handle large projects, which can have terabytes of content and hundreds of thousands of views per second.[7][8] Because Wikipedia is one of the world's largest and most visited websites, achieving scalability through multiple layers of caching and database replication has been a major concern for developers. Another major aspect of MediaWiki is its internationalization; its interface is available in more than 400 languages.[9] The software has hundreds of configuration settings[10] and more than 1,000 extensions available for enabling various features to be added or changed.[11]

MediaWiki provides a rich core feature set and a mechanism to attach extensions to provide additional functionality.

Due to the strong emphasis on multilingualism in the Wikimedia projects, internationalization and localization have received significant attention from developers. The user interface has been fully or partially translated into more than 400 languages on translatewiki.net,[9] and can be further customized by site administrators (the entire interface is editable through the wiki).

Several extensions, most notably those collected in the MediaWiki Language Extension Bundle, are designed to further enhance the multilingualism and internationalization of MediaWiki.

Installation of MediaWiki requires that the user have administrative privileges on a server running both PHP and a compatible type of SQL database. Some users find that setting up a virtual host is helpful if the majority of one's site runs under a framework (such as Zope or Ruby on Rails) that is largely incompatible with MediaWiki.[12] Cloud hosting can eliminate the need to deploy a new server.[13]

An installation PHP script is accessed via a web browser to initialize the wiki's settings. It prompts the user for a minimal set of required parameters, leaving further changes, such as enabling uploads,[14] adding a site logo,[15] and installing extensions, to be made by modifying configuration settings contained in a file called LocalSettings.php.[16] Some aspects of MediaWiki can be configured through special pages or by editing certain pages; for instance, abuse filters can be configured through a special page,[17] and certain gadgets can be added by creating JavaScript pages in the MediaWiki namespace.[18] The MediaWiki community publishes a comprehensive installation guide.[19]

One of the earliest differences between MediaWiki (and its predecessor, UseModWiki) and other wiki engines was the use of "free links" instead of CamelCase. When MediaWiki was created, it was typical for wikis to require text like "WorldWideWeb" to create a link to a page about the World Wide Web; links in MediaWiki, on the other hand, are created by surrounding words with double square brackets, and any spaces between them are left intact, e.g. [[World Wide Web]]. This change was logical for the purpose of creating an encyclopedia, where accuracy in titles is important.
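To make the contrast concrete, here is a minimal Python sketch that extracts free-link targets from wikitext and, for comparison, finds the CamelCase words an older wiki engine would have turned into links. The regular expressions and the helper names are illustrative assumptions; MediaWiki's real title handling covers many more cases (namespaces, forbidden characters, configurable first-letter capitalization).

```python
import re

# [[Target]] or [[Target|label]]; group 1 is the target before any pipe.
FREE_LINK = re.compile(r"\[\[([^\[\]|]+)(?:\|[^\[\]]*)?\]\]")
# Two or more capitalized runs written together, e.g. "WorldWideWeb".
CAMEL_CASE = re.compile(r"\b(?:[A-Z][a-z]+){2,}\b")


def free_link_targets(wikitext: str) -> list[str]:
    """Return the targets of links written with double square brackets."""
    return [m.group(1).strip() for m in FREE_LINK.finditer(wikitext)]


def camel_case_links(text: str) -> list[str]:
    """Return the words a CamelCase-style wiki would treat as links."""
    return CAMEL_CASE.findall(text)


def title_to_url_path(title: str) -> str:
    """Simplified normalization: uppercase the first letter, spaces become underscores."""
    title = title.strip()
    if not title:
        return title
    return (title[0].upper() + title[1:]).replace(" ", "_")
```

With free links, the spaces in "World Wide Web" are preserved in the title and only converted to underscores when the URL path is built.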

MediaWiki uses an extensible[20] lightweight wiki markup designed to be easier to use and learn than HTML. Tools exist for converting content such as tables between MediaWiki markup and HTML.[21] Efforts have been made to create a MediaWiki markup spec, but a consensus seems to have been reached that Wikicode requires context-sensitive grammar rules.[22][23] The following side-by-side comparison illustrates the differences between wiki markup and HTML:

| MediaWiki syntax (the "behind the scenes" code used to add formatting to text) | HTML equivalent (another type of "behind the scenes" code used to add formatting to text) | Rendered output (seen onscreen by a site viewer) |
|---|---|---|
| `====A dialogue====`<br>`"Take some more [[tea]]," the March Hare said to Alice, very earnestly.`<br>`"I've had nothing yet," Alice replied in an offended tone: "so I can't take more."`<br>`"You mean you can't take ''less''," said the Hatter: "it's '''very''' easy to take ''more'' than nothing."` | `<h4>A dialogue</h4>`<br>`<p>"Take some more <a href="/wiki/Tea" title="Tea">tea</a>," the March Hare said to Alice, very earnestly.</p> <br>`<br>`<p>"I've had nothing yet," Alice replied in an offended tone: "so I can't take more."</p> <br>`<br>`<p>"You mean you can't take <i>less</i>," said the Hatter: "it's <b>very</b> easy to take <i>more</i> than nothing."</p>` | **A dialogue**<br>"Take some more tea," the March Hare said to Alice, very earnestly.<br>"I've had nothing yet," Alice replied in an offended tone: "so I can't take more."<br>"You mean you can't take *less*," said the Hatter: "it's **very** easy to take *more* than nothing." |

(Quotation above from Alice's Adventures in Wonderland by Lewis Carroll)
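For the small subset of markup used in this example, the mapping from wiki markup to HTML can be approximated in a few lines. The following Python sketch is a toy converter, not MediaWiki's parser: it handles only headings, bold, italics and plain internal links, and the `/wiki/` URL prefix is an assumption taken from the HTML column above.

```python
import html
import re

HEADING = re.compile(r"^(={1,6})\s*(.+?)\s*\1$")
BOLD = re.compile(r"'''(.+?)'''")
ITALIC = re.compile(r"''(.+?)''")
LINK = re.compile(r"\[\[([^\[\]|]+)\]\]")


def _link(match: re.Match) -> str:
    target = match.group(1).strip()
    page = target[0].upper() + target[1:]  # by default the first letter of a title is case-insensitive
    return f'<a href="/wiki/{page.replace(" ", "_")}" title="{page}">{target}</a>'


def render_inline(text: str) -> str:
    # Escape &, < and > only; apostrophes must survive because '' and ''' are markup.
    text = html.escape(text, quote=False)
    text = BOLD.sub(r"<b>\1</b>", text)  # bold first, so ''' is not read as '' plus '
    text = ITALIC.sub(r"<i>\1</i>", text)
    return LINK.sub(_link, text)


def wikitext_to_html(wikitext: str) -> str:
    """Convert headings, bold, italics and [[links]]; other non-blank lines become <p> elements."""
    out = []
    for line in wikitext.splitlines():
        line = line.strip()
        if not line:
            continue
        heading = HEADING.match(line)
        if heading:
            level = len(heading.group(1))
            out.append(f"<h{level}>{render_inline(heading.group(2))}</h{level}>")
        else:
            out.append(f"<p>{render_inline(line)}</p>")
    return "\n".join(out)
```

Even this toy shows why wikitext resists a simple grammar: the meaning of a run of apostrophes depends on how many appear, so rule order matters.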

MediaWiki's default page-editing tools have been described as somewhat challenging to learn.[24] A survey of students assigned to use a MediaWiki-based wiki found that when they were asked an open question about main problems with the wiki, 24% cited technical problems with formatting, e.g. "Couldn't figure out how to get an image in. Can't figure out how to show a link with words; it inserts a number."[25]

To make editing long pages easier, MediaWiki allows the editing of a subsection of a page (as identified by its header). A registered user can also indicate whether or not an edit is minor. Correcting spelling, grammar or punctuation are examples of minor edits, whereas adding paragraphs of new text is an example of a non-minor edit.
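Section editing can be pictured as cutting the page at its headings, replacing one piece and joining the pieces back together. The Python sketch below assumes sections are delimited by heading lines such as `== Title ==` and numbers them from 0, with section 0 being the text before the first heading; a section is taken to run until the next heading of the same or a higher level, so it includes its own subsections. This is a simplification: the real parser also ignores headings inside comments, templates and similar constructs.

```python
import re

# A heading line such as "== History ==" (levels 1 to 6).
HEADING_LINE = re.compile(r"^(={1,6})[^=].*?\1\s*$")


def _level(line: str) -> int:
    match = HEADING_LINE.match(line)
    return len(match.group(1)) if match else 0


def _span(lines: list[str], index: int) -> tuple[int, int]:
    """Return the [start, end) line indices of section `index`."""
    headings = [i for i, line in enumerate(lines) if _level(line)]
    if index == 0:
        return 0, (headings[0] if headings else len(lines))
    if not 1 <= index <= len(headings):
        raise IndexError(f"no section {index}")
    start = headings[index - 1]
    level = _level(lines[start])
    # Stop at the next heading of the same or a higher level (fewer "=" signs).
    end = next((i for i in headings[index:] if _level(lines[i]) <= level), len(lines))
    return start, end


def get_section(wikitext: str, index: int) -> str:
    lines = wikitext.splitlines(keepends=True)
    start, end = _span(lines, index)
    return "".join(lines[start:end])


def replace_section(wikitext: str, index: int, new_text: str) -> str:
    """Swap one section for new_text, which should end with a newline."""
    lines = wikitext.splitlines(keepends=True)
    start, end = _span(lines, index)
    return "".join(lines[:start]) + new_text + "".join(lines[end:])
```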

Sometimes, while one user is editing, a second user saves an edit to the same part of the page. When the first user then attempts to save the page, an edit conflict occurs. The first user is then given an opportunity to merge their content into the page as it now exists following the second user's save.
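A common way to detect this situation is optimistic concurrency: an edit remembers which revision it was based on, and the save is refused if the page has changed in the meantime. The Python sketch below models that idea with a hypothetical in-memory `Page` class; it illustrates the concept only and is not MediaWiki's implementation, which can, for instance, merge non-overlapping changes automatically before reporting a conflict.

```python
from dataclasses import dataclass, field


class EditConflict(Exception):
    """Raised when a save is based on a revision that is no longer the latest."""


@dataclass
class Page:
    revisions: list[str] = field(default_factory=lambda: [""])

    @property
    def latest_id(self) -> int:
        return len(self.revisions) - 1

    def save(self, base_id: int, text: str) -> int:
        # Refuse the save if someone else saved after this edit began.
        if base_id != self.latest_id:
            raise EditConflict(f"edit based on revision {base_id}, latest is {self.latest_id}")
        self.revisions.append(text)
        return self.latest_id


page = Page()
first_base = page.latest_id   # the first user opens the editor
second_base = page.latest_id  # the second user opens the editor
page.save(second_base, "second user's text")  # succeeds
try:
    page.save(first_base, "first user's text")
except EditConflict as conflict:
    print("conflict:", conflict)  # the first user must now merge their changes
```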

MediaWiki's user interface has been localized in many different languages. A language for the wiki content itself can also be set, to be sent in the "Content-Language" HTTP header and "lang" HTML attribute.

VisualEditor has its own integrated wikitext editing interface, known as the 2017 wikitext editor; the older editing interface is known as the 2010 wikitext editor.

MediaWiki has an extensible web API (application programming interface) that provides direct, high-level access to the data contained in the MediaWiki databases. Client programs can use the API to log in, get data, and post changes. The API supports thin web-based JavaScript clients and end-user applications (such as vandal-fighting tools). The API can be accessed by the backend of another web site.[26] An extensive Python bot library, Pywikibot,[27] and a popular semi-automated tool called AutoWikiBrowser, also interface with the API.[28] The API is accessed via URLs such as https://en.wikipedia.org/w/api.php?action=query&list=recentchanges. In this case, the query would be asking Wikipedia for information relating to the last 10 edits to the site. One of the perceived advantages of the API is its language independence; it listens for HTTP connections from clients and can send a response in a variety of formats, such as XML, serialized PHP, or JSON.[29] Client code has been developed to provide layers of abstraction to the API.[30]
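As an illustration, the recent-changes query above can be built and its response read with only the Python standard library. This is a sketch under stated assumptions: it adds `rclimit=10` (making the default limit explicit) and `format=json` to the example URL, and it assumes the JSON response lists changes under `query.recentchanges`, each with a `title` field; the `fetch` helper is not exercised here because it needs network access, and Wikimedia sites expect a descriptive User-Agent.

```python
import json
import urllib.parse
import urllib.request

API_ENDPOINT = "https://en.wikipedia.org/w/api.php"


def recent_changes_url(endpoint: str = API_ENDPOINT, limit: int = 10) -> str:
    """Build a list=recentchanges query URL that asks for JSON."""
    params = {
        "action": "query",
        "list": "recentchanges",
        "rclimit": limit,
        "format": "json",
    }
    return endpoint + "?" + urllib.parse.urlencode(params)


def titles_from_response(payload: str) -> list[str]:
    """Extract page titles from a recentchanges JSON response."""
    data = json.loads(payload)
    return [change["title"] for change in data["query"]["recentchanges"]]


def fetch(url: str, user_agent: str) -> str:
    """Perform the HTTP GET request and return the body as text."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")
```

Because the API speaks plain HTTP and JSON, the same two steps (build a URL, decode the response) look much the same in any client language.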

Among the features of MediaWiki to assist in tracking edits is a Recent Changes feature that provides a list of recent edits to the wiki. This list contains basic information about those edits such as the editing user, the edit summary, the page edited, as well as any tags (e.g. "possible vandalism")[31] added by customizable abuse filters and other extensions to aid in combating unhelpful edits.[32] On more active wikis, so many edits occur that it is hard to track Recent Changes manually. Anti-vandal software, including user-assisted tools,[33] is sometimes employed on such wikis to process Recent Changes items. Server load can be reduced by sending a continuous feed of Recent Changes to an IRC channel that these tools can monitor, eliminating their need to send requests for a refreshed Recent Changes feed to the API.[34][35]

Another important tool is watchlisting. Each logged-in user has a watchlist to which the user can add whatever pages he or she wishes. When an edit is made to one of those pages, a summary of that edit appears on the watchlist the next time it is refreshed.[36] As with the recent changes page, recent edits that appear on the watchlist contain clickable links for easy review of the article history and specific changes made.
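Conceptually, a watchlist is a per-user filter over the same stream of edits that Recent Changes shows, and tags added by abuse filters give another filter. The Python sketch below uses a hypothetical `Change` record holding a few of the fields mentioned above (page, user, edit summary, tags); the field names and the tag string are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Change:
    title: str
    user: str
    summary: str
    tags: set[str] = field(default_factory=set)


def watchlist_view(changes: list[Change], watched: set[str]) -> list[Change]:
    """Keep only changes to pages on the user's watchlist, preserving input order."""
    return [c for c in changes if c.title in watched]


def flagged(changes: list[Change], tag: str) -> list[Change]:
    """Keep only changes carrying a given tag, e.g. one added by an abuse filter."""
    return [c for c in changes if tag in c.tags]
```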

There is also the capability to review all edits made by any particular user. In this way, if an edit is identified as problematic, it is possible to check the user's other edits for issues.

MediaWiki allows one to link to specific versions of articles. This has been useful to the scientific community, in that expert peer reviewers could analyse articles, improve them and provide links to the trusted version of that article.[37]
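A link to one specific version is typically formed with the `oldid` parameter of `index.php`. The sketch assumes a Wikipedia-style script path (`/w/index.php`), which other installations may configure differently, and the revision number in the usage line is made up.

```python
from urllib.parse import urlencode


def permalink(site: str, title: str, rev_id: int) -> str:
    """Build a URL that always shows the given revision of a page."""
    query = urlencode({"title": title.replace(" ", "_"), "oldid": rev_id})
    return f"{site}/w/index.php?{query}"


print(permalink("https://en.wikipedia.org", "Alice in Wonderland", 123456))
```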

Navigation through the wiki is largely through internal wikilinks. MediaWiki's wikilinks implement page existence detection, in which a link is colored blue if the target page exists on the local wiki and red if it does not. If a user clicks on a red link, they are prompted to create an article with that title. Page existence detection makes it practical for users to create "wikified" articles—that is, articles containing links to other pertinent subjects—without those other articles being yet in existence.
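Page existence detection amounts to normalizing the link target and looking it up among existing titles. In this Python sketch the normalization rules are a simplified assumption (underscores treated as spaces, repeated spaces collapsed, first letter uppercased), and the set of existing titles stands in for a lookup against the page table.

```python
def normalize_title(title: str) -> str:
    """Simplified normalization: '_' becomes ' ', spaces collapse, first letter uppercased."""
    title = " ".join(title.replace("_", " ").split())
    return title[:1].upper() + title[1:]


def link_class(target: str, existing: set[str]) -> str:
    """Return 'blue' if the target page exists on the wiki, otherwise 'red'."""
    return "blue" if normalize_title(target) in existing else "red"
```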

Interwiki links function much the same way as namespaces. A set of interwiki prefixes can be configured to cause, for instance, a page title of wikiquote:Jimbo Wales to direct the user to the Jimbo Wales article on Wikiquote.[38] Unlike internal wikilinks, interwiki links lack page existence detection functionality, and accordingly there is no way to tell whether a blue interwiki link is broken or not.
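An interwiki prefix can be thought of as a key into a table of URL patterns in which `$1` stands for the page name. The Python sketch below assumes such a table; the two entries are hypothetical examples, and, as described above, the resolver never checks whether the remote page exists.

```python
from typing import Optional
from urllib.parse import quote

# Hypothetical interwiki map: prefix -> URL pattern, with $1 replaced by the page name.
INTERWIKI = {
    "wikiquote": "https://en.wikiquote.org/wiki/$1",
    "wiktionary": "https://en.wiktionary.org/wiki/$1",
}


def resolve_interwiki(link: str, table: dict[str, str] = INTERWIKI) -> Optional[str]:
    """Return the external URL for 'prefix:Page', or None if it is not an interwiki link."""
    prefix, sep, rest = link.partition(":")
    if not sep:
        return None
    pattern = table.get(prefix.strip().lower())
    if pattern is None:
        return None  # unknown prefix: treat as an ordinary local title
    return pattern.replace("$1", quote(rest.strip().replace(" ", "_")))
```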

Interlanguage links are the small navigation links, shown in the sidebar in most MediaWiki skins, that connect an article with related articles in other languages within the same wiki family. This can provide language-specific communities connected by a larger context, with all wikis on the same server or each on its own server.[39]

Previously, Wikipedia used interlanguage links to link an article to other articles on the same topic in other editions of Wikipedia. This was superseded by the launch of Wikidata.[40]

Page tabs are displayed at the top of pages. These tabs allow users to perform actions or view pages that are related to the current page. The available default actions include viewing, editing, and discussing the current page. The specific tabs displayed depend on whether the user is logged into the wiki and whether the user has sysop privileges on the wiki. For instance, the ability to move a page or add it to one's watchlist is usually restricted to logged-in users. The site administrator can add or remove tabs by using JavaScript or installing extensions.[41]

Each page has an associated history page from which the user can access every version of the page that has ever existed and generate diffs between any two versions of their choice. Users' contributions are displayed not only here, but also via a "user contributions" option on a sidebar. In a 2004 article, Carl Challborn and Teresa Reimann noted that "While this feature may be a slight deviation from the collaborative, 'ego-less' spirit of wiki purists, it can be very useful for educators who need to assess the contribution and participation of individual student users."[42]
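Generating a diff between two stored versions can be illustrated with Python's standard `difflib` module. This is only an analogy: MediaWiki uses its own diff implementation and presentation, and the revision texts and labels here are invented.

```python
import difflib


def revision_diff(old: str, new: str, old_label: str = "old", new_label: str = "new") -> list[str]:
    """Line-based unified diff between two revision texts."""
    return list(difflib.unified_diff(
        old.splitlines(), new.splitlines(),
        fromfile=old_label, tofile=new_label, lineterm="",
    ))


for line in revision_diff("Tea is a drink.\nIt is hot.", "Tea is a drink.\nIt is very hot.", "rev 1", "rev 2"):
    print(line)
```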

MediaWiki provides many features beyond hyperlinks for structuring content. One of the earliest such features is namespaces. One of Wikipedia's earliest problems had been the separation of encyclopedic content from pages pertaining to maintenance and communal discussion, as well as personal pages about encyclopedia editors. Namespaces are prefixes before a page title (such as "User:" or "Talk:") that serve as descriptors for the page's purpose and allow multiple pages with different functions to exist under the same title. For instance, a page titled "[[The Terminator]]", in the default namespace, could describe the 1984 movie starring Arnold Schwarzenegger, while a page titled "[[User:The Terminator]]" could be a profile describing a user who chooses this name as a pseudonym. More commonly, each namespace has an associated "Talk:" namespace, which can be used to discuss its contents, such as "User talk:" or "Template talk:". The purpose of having discussion pages is to allow content to be separated from discussion surrounding the content.[43][44]

Namespaces can be viewed as folders that separate different basic types of information or functionality. Custom namespaces can be added by the site administrators. There are 16 namespaces by default for content, with 2 "pseudo-namespaces" used for dynamically generated "Special:" pages and links to media files. Each namespace on MediaWiki is numbered: content page namespaces have even numbers and their associated talk page namespaces have odd numbers.[45]
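The even/odd numbering means a page's talk namespace can be computed by setting the lowest bit of the namespace number, and the subject namespace by clearing it. The table below lists MediaWiki's default namespace numbers as a Python dictionary; "Project" is a placeholder, since real installations usually name that namespace after the site (for example "Wikipedia"). The helper functions are illustrative.

```python
DEFAULT_NAMESPACES = {
    -2: "Media",    # pseudo-namespace: direct links to media files
    -1: "Special",  # pseudo-namespace: dynamically generated pages
    0: "",          # main (article) namespace, no prefix
    1: "Talk",
    2: "User",
    3: "User talk",
    4: "Project",
    5: "Project talk",
    6: "File",
    7: "File talk",
    8: "MediaWiki",
    9: "MediaWiki talk",
    10: "Template",
    11: "Template talk",
    12: "Help",
    13: "Help talk",
    14: "Category",
    15: "Category talk",
}


def is_talk(ns: int) -> bool:
    return ns >= 0 and ns % 2 == 1


def talk_of(ns: int) -> int:
    if ns < 0:
        raise ValueError("pseudo-namespaces have no talk namespace")
    return ns | 1  # even subject number -> odd talk number


def subject_of(ns: int) -> int:
    if ns < 0:
        raise ValueError("pseudo-namespaces have no subject namespace")
    return ns & ~1  # odd talk number -> even subject number
```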

Users can create new categories and add pages and files to those categories by appending one or more category tags to the content text. Adding these tags creates links at the bottom of the page that take the reader to the list of all pages in that category, making it easy to browse related articles.[46] The use of categorization to organize content has been described as a combination of:

In addition to namespaces, content can be ordered using subpages. This simple feature provides automatic breadcrumbs of the pattern [[Page title/Subpage title]] from the page after the slash (in this case, "Subpage title") to the page before the slash (in this case, "Page title").
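The breadcrumb trail is derived purely from the slashes in the title. In the sketch below the function name is hypothetical, and the optional `existing` filter reflects an assumption that only ancestor pages that actually exist get linked; in practice subpages also have to be enabled for the namespace.

```python
from typing import Optional


def breadcrumbs(title: str, existing: Optional[set[str]] = None) -> list[str]:
    """Ancestor pages of a subpage, from the top level down.

    If `existing` is given, ancestors missing from it are skipped.
    """
    parts = title.split("/")
    ancestors = ["/".join(parts[:i]) for i in range(1, len(parts))]
    if existing is not None:
        ancestors = [a for a in ancestors if a in existing]
    return ancestors
```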

If the feature is enabled, users can customize their stylesheets and configure client-side JavaScript to be executed with every pageview. On Wikipedia, this has led to a large number of additional tools and helpers developed through the wiki and shared among users. For instance, navigation popups is a custom JavaScript tool that shows previews of articles when the user hovers over links and also provides shortcuts for common maintenance tasks.[48]

The entire MediaWiki user interface can be edited through the wiki itself by users with the necessary permissions (typically called "administrators"). This is done through a special namespace with the prefix "MediaWiki:", where each page title identifies a particular user interface message. Using an extension,[49] it is also possible for a user to create personal scripts, and to choose whether certain sitewide scripts should apply to them by toggling the appropriate options in the user preferences page.
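The lookup order for an interface message can be sketched as follows: an on-wiki page in the "MediaWiki:" namespace overrides the shipped translation, which in turn falls back to English. This Python model is an assumption-laden illustration: `DEFAULT_MESSAGES` and `message()` are hypothetical, the `/xx` suffix for non-content languages is the assumed override convention, and real MediaWiki uses longer language fallback chains.

```python
# Hypothetical built-in translations; MediaWiki ships these as message files.
DEFAULT_MESSAGES = {
    "en": {"mainpage": "Main Page"},
    "de": {"mainpage": "Hauptseite"},
}


def message(key: str, lang: str, wiki_pages: dict[str, str], content_lang: str = "en") -> str:
    """Look up an interface message, preferring an override page edited on the wiki."""
    page_name = key[:1].upper() + key[1:]
    # Override pages: "MediaWiki:Key" for the content language, "MediaWiki:Key/xx" otherwise.
    title = f"MediaWiki:{page_name}" if lang == content_lang else f"MediaWiki:{page_name}/{lang}"
    if title in wiki_pages:
        return wiki_pages[title]
    # No override: use the shipped translation, then English.
    for code in (lang, "en"):
        text = DEFAULT_MESSAGES.get(code, {}).get(key)
        if text is not None:
            return text
    return f"⧼{key}⧽"  # placeholder for a missing message
```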

The "MediaWiki:" namespace was originally also used for creating custom text blocks that could then be dynamically loaded into other pages using a special syntax. This content was later moved into its own namespace, "Template:".

Templates are text blocks that can be dynamically loaded inside another page whenever that page is requested. A template is called with a special link in double curly brackets (for example "{{Disputed|date=October 2018}}"), which loads the template (in this case located at Template:Disputed) in place of the template call.

Templates are structured documents containing attribute–value pairs. They are defined with parameters, which are assigned values when the template is transcluded on an article page. The name of a parameter is separated from its value by an equals sign. A class of templates known as infoboxes is used on Wikipedia to collect and present a subset of information about the article's subject, usually at the top (mobile view) or top right-hand corner (desktop view) of the document.
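Parameter substitution can be sketched in a few lines of Python. The sketch assumes the triple-brace placeholder syntax for parameters inside a template body (`{{{date}}}`, or `{{{date|default}}}` with a fallback value), numbers unnamed arguments from 1, and handles only a single level of non-nested calls; the template bodies used with it are hypothetical.

```python
import re
from typing import Optional

# {{Name}} or {{Name|arg|name=value}}; nested calls are not supported.
CALL = re.compile(r"\{\{\s*([^{}|]+?)\s*(?:\|([^{}]*))?\}\}")
# {{{param}}} or {{{param|default}}} inside a template body.
PARAM = re.compile(r"\{\{\{\s*([^{}|]+?)\s*(?:\|([^{}]*))?\}\}\}")


def parse_args(arg_text: Optional[str]) -> dict[str, str]:
    """Split 'a|b|date=October 2018' into {'1': 'a', '2': 'b', 'date': 'October 2018'}."""
    args: dict[str, str] = {}
    position = 0
    pieces = arg_text.split("|") if arg_text else []
    for piece in pieces:
        name, eq, value = piece.partition("=")
        if eq:
            args[name.strip()] = value.strip()  # named: surrounding whitespace trimmed
        else:
            position += 1
            args[str(position)] = piece  # positional: kept verbatim
    return args


def fill(body: str, args: dict[str, str]) -> str:
    def substitute(m: re.Match) -> str:
        name, default = m.group(1), m.group(2)
        if name in args:
            return args[name]
        # No value and no default: the placeholder stays as literal text.
        return default if default is not None else m.group(0)

    return PARAM.sub(substitute, body)


def expand(wikitext: str, templates: dict[str, str]) -> str:
    def call(m: re.Match) -> str:
        body = templates.get(m.group(1))
        if body is None:
            return m.group(0)  # unknown template: leave the call as written
        return fill(body, parse_args(m.group(2)))

    return CALL.sub(call, wikitext)
```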

Pages in other namespaces can also be transcluded as templates. In particular, a page in the main namespace can be transcluded by prefixing its title with a colon; for example, {{:MediaWiki}} transcludes the article "MediaWiki" from the main namespace. It is also possible to mark the portions of a page that should be transcluded in several ways, the most basic of which are:[50]

- `<noinclude>...</noinclude>`, which marks content that is not to be transcluded;
- `<includeonly>...</includeonly>`, which marks content that is not rendered unless it is transcluded;
- `<onlyinclude>...</onlyinclude>`, which marks content that is to be the only content transcluded.

A related method, called template substitution (invoked by adding `subst:` at the beginning of a template link), inserts the contents of the template into the target page (like a copy-and-paste operation) instead of loading the template contents dynamically whenever the page is loaded. This can lead to inconsistency when using templates, but may be useful in certain cases, and in most cases requires fewer server resources (the actual savings vary depending on wiki configuration and the complexity of the template).
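The effect of the three transclusion tags can be modelled as two views of the same page source: what is shown when the template page itself is viewed, and what is inserted when another page transcludes it. The Python sketch below is a simplification that assumes well-formed, non-nested tags; the real preprocessor handles many more edge cases.

```python
import re

NOINCLUDE = re.compile(r"<noinclude>.*?</noinclude>", re.DOTALL)
INCLUDEONLY = re.compile(r"<includeonly>.*?</includeonly>", re.DOTALL)
ONLYINCLUDE = re.compile(r"<onlyinclude>(.*?)</onlyinclude>", re.DOTALL)


def when_viewed(source: str) -> str:
    """Text of the template page when viewed directly."""
    text = INCLUDEONLY.sub("", source)  # includeonly content is hidden
    return re.sub(r"</?(?:noinclude|onlyinclude)>", "", text)  # other tags vanish, content stays


def when_transcluded(source: str) -> str:
    """Text inserted into a page that transcludes this one."""
    blocks = ONLYINCLUDE.findall(source)
    if blocks:
        source = "".join(blocks)  # onlyinclude present: nothing outside it is transcluded
    text = NOINCLUDE.sub("", source)  # noinclude content is dropped
    return re.sub(r"</?includeonly>", "", text)
```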

Templates have found many different uses. Templates enable users to create complex table layouts that are used consistently across multiple pages, where only the content of the tables is inserted using template parameters. Templates are frequently used to identify problems with a Wikipedia article by placing a template in the article. This template then outputs a graphical box stating that the article content is disputed or in need of some other attention, and also categorizes the article so that articles of this nature can be located. Templates are also used on user pages to send users standard messages welcoming them to the site,[51] giving them awards for outstanding contributions,[52][53] warning them when their behavior is considered inappropriate,[54] notifying them when they are blocked from editing,[55] and so on.

MediaWiki offers flexibility in creating and defining user groups. For instance, it would be possible to create an arbitrary "ninja" group that can block users and delete pages, and whose edits are hidden by default in the recent changes log. It is also possible to set up a group of "autoconfirmed" users that one becomes a member of after making a certain number of edits and waiting a certain number of days.[56] Some groups that are enabled by default are bureaucrats and sysops. Bureaucrats have the power to change other users' rights. Sysops have power over page protection and deletion and the blocking of users from editing. MediaWiki's available controls on editing rights have been deemed sufficient for publishing and maintaining important documents such as a manual of standard operating procedures in a hospital.[57]
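In an actual installation these rules live in LocalSettings.php (for example `$wgGroupPermissions`, `$wgAutoConfirmCount` and `$wgAutoConfirmAge`). The Python sketch below only models the idea: the rights assigned to each group, including the arbitrary "ninja" group, and the autoconfirmed thresholds are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical rights per group, loosely modelled on MediaWiki's group permissions.
GROUP_RIGHTS = {
    "user": {"edit", "move"},
    "autoconfirmed": {"editsemiprotected"},
    "sysop": {"block", "delete", "protect"},
    "bureaucrat": {"userrights"},
    "ninja": {"block", "delete"},  # an arbitrary custom group
}

AUTOCONFIRM_EDITS = 10  # assumed threshold, cf. $wgAutoConfirmCount
AUTOCONFIRM_DAYS = 4    # assumed threshold, cf. $wgAutoConfirmAge (configured in seconds)


@dataclass
class User:
    name: str
    groups: set[str]
    edit_count: int
    account_age_days: float


def effective_groups(user: User) -> set[str]:
    groups = set(user.groups)
    if user.edit_count >= AUTOCONFIRM_EDITS and user.account_age_days >= AUTOCONFIRM_DAYS:
        groups.add("autoconfirmed")  # implicit group, granted automatically
    return groups


def user_can(user: User, right: str) -> bool:
    return any(right in GROUP_RIGHTS.get(g, set()) for g in effective_groups(user))
```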

MediaWiki comes with a basic set of features related to restricting access, but its original and ongoing design is driven by functions that largely relate to content, not content segregation. As a result, with minimal exceptions (related to specific tools and their related "Special" pages), page access control has never been a high priority in core development and developers have stated that users requiring secure user access and authorization controls should not rely on MediaWiki, since it was never designed for these kinds of situations. For instance, it is extremely difficult to create a wiki where only certain users can read and access some pages.[58] Here, wiki engines like Foswiki, MoinMoin and Confluence provide more flexibility by supporting advanced security mechanisms like access control lists.

The MediaWiki codebase contains various hooks using callback functions to add additional PHP code in an extensible way. This allows developers to write extensions without necessarily needing to modify the core or having to submit their code for review. Installing an extension typically consists of adding a line to the configuration file, though in some cases additional changes such as database updates or core patches are required.

Five main extension points were created to allow developers to add features and functionalities to MediaWiki. Hooks are run every time a certain event happens; for instance, the ArticleSaveComplete hook occurs after a save article request has been processed.[59] This can be used, for example, by an extension that notifies selected users whenever a page edit occurs on the wiki from new or anonymous users.[60] New tags can be created to process data with opening and closing tags (<newtag>...</newtag>).[61] Parser functions can be used to create a new command (...).[62] New special pages can be created to perform a specific function. These pages are dynamically generated. For example, a special page might show all pages that have one or more links to an external site or it might create a form providing user submitted feedback.[63] Skins allow users to customize the look and feel of MediaWiki.[64] A minor extension point allows the use of Amazon S3 to host image files.[65]
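The hook mechanism is essentially an event registry: extensions attach callbacks to named events, and the core runs them when the event occurs. The Python sketch below is a toy model, not MediaWiki's PHP hook system: it reuses the hook name from the text, but the `(title, user)` arguments and the IP-address test are hypothetical simplifications, and the rule that returning `False` stops later handlers only loosely follows MediaWiki's convention.

```python
from typing import Any, Callable

Handler = Callable[..., bool]


class HookRegistry:
    """Toy registry: extensions attach callbacks to named events."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = {}

    def register(self, name: str, handler: Handler) -> None:
        self._handlers.setdefault(name, []).append(handler)

    def run(self, name: str, *args: Any) -> bool:
        """Call handlers in registration order; a handler returning False stops the chain."""
        for handler in self._handlers.get(name, []):
            if handler(*args) is False:
                return False
        return True


notifications: list[str] = []


def notify_on_anonymous_edit(title: str, user: str) -> bool:
    # Hypothetical rule: a "user name" made only of digits and dots is an IP address.
    if user.replace(".", "").isdigit():
        notifications.append(f"{user} edited {title}")
    return True  # let other handlers run


hooks = HookRegistry()
hooks.register("ArticleSaveComplete", notify_on_anonymous_edit)
hooks.run("ArticleSaveComplete", "Tea", "203.0.113.5")
hooks.run("ArticleSaveComplete", "Tea", "Alice")
# notifications is now ["203.0.113.5 edited Tea"]
```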

Among the most popular extensions is a parser function extension, ParserFunctions, which allows different content to be rendered based on the result of conditional statements.[66] These conditional statements can perform functions such as evaluating whether a parameter is empty, comparing strings, evaluating mathematical expressions, and returning one of two values depending on whether a page exists. It was designed as a replacement for a notoriously inefficient template called Qif.[67] Schindler recounts the history of the ParserFunctions extension as follows:[68]
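The conditional functions described above are written in wikitext as `{{#if: test | then | else }}`, `{{#ifeq: a | b | then | else }}` and `{{#ifexist: page | then | else }}`. The Python analogues below are a simplified sketch with hypothetical function names; they ignore how wikitext and parameters are expanded before a parser function runs.

```python
from typing import Optional


def pf_if(test: str, then: str = "", else_: str = "") -> str:
    """#if: the test counts as true when it is non-empty after trimming whitespace."""
    return then if test.strip() else else_


def _as_number(s: str) -> Optional[float]:
    try:
        return float(s)
    except ValueError:
        return None


def pf_ifeq(a: str, b: str, then: str = "", else_: str = "") -> str:
    """#ifeq: compare numerically when both sides are numbers, otherwise as strings."""
    a, b = a.strip(), b.strip()
    na, nb = _as_number(a), _as_number(b)
    equal = (na == nb) if na is not None and nb is not None else (a == b)
    return then if equal else else_


def pf_ifexist(title: str, existing: set[str], then: str = "", else_: str = "") -> str:
    """#ifexist: choose a branch depending on whether the page exists."""
    return then if title.strip() in existing else else_
```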

In 2006 some Wikipedians discovered that through an intricate and complicated interplay of templating features and CSS they could create conditional wiki text, i.e. text that was displayed if a template parameter had a specific value. This included repeated calls of templates within templates, which bogged down the performance of the whole system. The developers faced the choice of either disallowing the spreading of an obviously desired feature by detecting such usage and explicitly disallowing it within the software or offering an efficient alternative. The latter was done by Tim Starling, who announced the introduction of parser functions, wiki text that calls functions implemented in the underlying software. At first, only conditional text and the computation of simple mathematical expressions were implemented, but this already increased the possibilities for wiki editors enormously. With time further parser functions were introduced, finally leading to a framework that allowed the simple writing of extension functions to add arbitrary functionalities, like e.g. geo-coding services or widgets. This time the developers were clearly reacting to the demand of the community, being forced either to fight the solution of the issue that the community had (i.e. conditional text), or offer an improved technical implementation to replace the previous practice and achieve an overall better performance.

Another parser functions extension, StringFunctions, was developed to allow evaluation of string length, string position, and so on. Wikimedia communities, having created awkward workarounds to accomplish the same functionality,[69] clamored for it to be enabled on their projects.[70] Much of its functionality was eventually integrated into the ParserFunctions extension,[71] albeit disabled by default and accompanied by a warning from Tim Starling that enabling string functions would allow users "to implement their own parsers in the ugliest, most inefficient programming language known to man: MediaWiki wikitext with ParserFunctions."[72]

Since 2012 an extension, Scribunto, has existed that allows for the creation of "modules"—wiki pages written in the scripting language Lua—which can then be run within templates and standard wiki pages. Scribunto has been installed on Wikipedia and other Wikimedia sites since 2013 and is used heavily on those sites. Scribunto code runs significantly faster than corresponding wikitext code using ParserFunctions.[73]

Another very popular extension is a citation extension that enables footnotes to be added to pages using inline references.[74] This extension has, however, been criticized for being difficult to use and requiring the user to memorize complex syntax. A gadget called RefToolbar attempts to make it easier to create citations using common templates. MediaWiki has some extensions that are well-suited for academia, such as mathematics extensions[75] and an extension that allows molecules to be rendered in 3D.[76]
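The inline-reference idea can be sketched as follows: each `<ref>...</ref>` is replaced by a numbered marker, and the collected texts are emitted where the `<references />` tag appears. The Python function below is a simplified illustration; named references, reference groups and the HTML produced by the real extension are left out.

```python
import re

REF = re.compile(r"<ref>(.*?)</ref>", re.DOTALL)
REFERENCES = re.compile(r"<references\s*/>")


def render_footnotes(wikitext: str) -> str:
    notes: list[str] = []

    def number(m: re.Match) -> str:
        notes.append(m.group(1).strip())
        return f"[{len(notes)}]"  # references are numbered in order of appearance

    body = REF.sub(number, wikitext)
    listing = "\n".join(f"{i}. {note}" for i, note in enumerate(notes, start=1))
    return REFERENCES.sub(lambda _m: listing, body)
```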

A generic Widgets extension exists that allows MediaWiki to integrate with virtually anything. Other examples of extensions that could improve a wiki are category suggestion extensions[77] and extensions for inclusion of Flash Videos,[78] YouTube videos,[79] and RSS feeds.[80] Metavid, a site that archives video footage of the U.S. Senate and House floor proceedings, was created using code extending MediaWiki into the domain of collaborative video authoring.[81]

There are many spambots that search the web for MediaWiki installations and add linkspam to them, despite the fact that MediaWiki uses the nofollow attribute to discourage such attempts at search engine optimization.[82] Part of the problem is that third party republishers, such as mirrors, may not independently implement the nofollow tag on their websites, so marketers can still get PageRank benefit by inserting links into pages when those entries appear on third party websites.[83] Anti-spam extensions have been developed to combat the problem by introducing CAPTCHAs,[84] blacklisting certain URLs,[85] and allowing bulk deletion of pages recently added by a particular user.[86]
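URL blacklisting can be sketched as checking the host of every external link in submitted text against a list of patterns before the edit is saved. The patterns and the `.example` domains below are hypothetical, and real anti-spam extensions define their own blacklist formats.

```python
import re
from urllib.parse import urlsplit

URL = re.compile(r'https?://[^\s\]<>"|]+', re.IGNORECASE)

# Hypothetical blacklist: each pattern matches a domain and its subdomains.
BLACKLIST = [
    re.compile(r"(^|\.)spam\.example$"),
    re.compile(r"(^|\.)cheap-pills\.example$"),
]


def host_of(url: str) -> str:
    return urlsplit(url).hostname or ""


def blocked_links(wikitext: str) -> list[str]:
    """Return the external links whose host matches a blacklist pattern."""
    return [u for u in URL.findall(wikitext) if any(p.search(host_of(u)) for p in BLACKLIST)]
```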

MediaWiki comes pre-installed with a standard text-based search. Extensions exist to let MediaWiki use more sophisticated third-party search engines, including Elasticsearch (which since 2014 has been in use on Wikipedia), Lucene[87] and Sphinx.[88]

Various MediaWiki extensions have also been created to allow for more complex, faceted search, on both data entered within the wiki and on metadata such as pages' revision history.[89][90] Semantic MediaWiki is one such extension.[91][92]

Various extensions to MediaWiki support rich content generated through specialized syntax. These include mathematical formulas using LaTeX, graphical timelines, mathematical plotting, musical scores and Egyptian hieroglyphs.

The software supports a wide variety of uploaded media files and allows image galleries and thumbnails to be generated with relative ease. There is also support for Exif metadata. Wikimedia Commons, one of the largest free-content media archives, runs on MediaWiki.

For WYSIWYG editing, MediaWiki offers VisualEditor, which simplifies the editing process and has been bundled with the software since MediaWiki 1.35.[93] Other extensions handle WYSIWYG editing to varying degrees.[94]

MediaWiki can use the MySQL/MariaDB, PostgreSQL or SQLite relational database management system. Support for Oracle Database and Microsoft SQL Server was dropped as of MediaWiki 1.34.[95] A MediaWiki database contains several dozen tables, including a page table that contains page titles, page IDs and other metadata;[96] and a revision table, to which a new row is added every time an edit is made, containing the page ID, a brief textual summary of the change performed, the user name of the editor (or their IP address, in the case of an unregistered user) and a timestamp.[97][98]
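A minimal sketch of the page/revision split, using Python's built-in sqlite3 module. The table and column names are simplified assumptions loosely modelled on the description above, not the actual MediaWiki schema, and save_edit is a hypothetical helper.

```python
import sqlite3

# Simplified, hypothetical versions of the page and revision tables.
# The real MediaWiki schema has more columns and more normalization.
SCHEMA = """
CREATE TABLE page (
    page_id    INTEGER PRIMARY KEY,
    page_title TEXT NOT NULL UNIQUE
);
CREATE TABLE revision (
    rev_id        INTEGER PRIMARY KEY,
    rev_page      INTEGER NOT NULL REFERENCES page(page_id),
    rev_comment   TEXT,
    rev_user_text TEXT NOT NULL,  -- user name, or IP address for anonymous edits
    rev_timestamp TEXT NOT NULL
);
"""


def save_edit(conn, title, user, comment, timestamp):
    """Record an edit: create the page row if needed, then append a revision row."""
    conn.execute("INSERT OR IGNORE INTO page (page_title) VALUES (?)", (title,))
    (page_id,) = conn.execute(
        "SELECT page_id FROM page WHERE page_title = ?", (title,)
    ).fetchone()
    conn.execute(
        "INSERT INTO revision (rev_page, rev_comment, rev_user_text, rev_timestamp)"
        " VALUES (?, ?, ?, ?)",
        (page_id, comment, user, timestamp),
    )
    return page_id


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
save_edit(conn, "Main_Page", "Alice", "create page", "20240101000000")
save_edit(conn, "Main_Page", "192.0.2.1", "fix typo", "20240102000000")
history = conn.execute(
    "SELECT rev_user_text, rev_comment FROM revision ORDER BY rev_id"
).fetchall()
print(history)
```

Every edit appends a row rather than updating one, so the full history of a page is simply its revision rows in order.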

In a 4½-year period prior to 2008, the MediaWiki database had 170 schema versions.[99] Possibly the largest schema change was made in 2005 with MediaWiki 1.5, when the storage of metadata was separated from that of content to improve performance and flexibility. When this upgrade was applied to Wikipedia, the site was locked for editing while the schema was converted to the new version, which took about 22 hours. Some software enhancement proposals, such as a proposal to allow sections of articles to be watched via the watchlist, have been rejected because the necessary schema changes would have required excessive Wikipedia downtime.[100]

Because it is used to run Wikipedia, one of the highest-traffic sites on the Web, MediaWiki's performance and scalability have been highly optimized.[101] MediaWiki supports Squid, load-balanced database replication, client-side caching, memcached or table-based caching of frequently accessed query results, a simple static file cache, feature-reduced operation, revision compression, and a job queue for database operations. MediaWiki developers have attempted to optimize the software by avoiding expensive algorithms and database queries, caching every result that is expensive to compute and has temporal locality of reference, and focusing on the hot spots in the code identified through profiling.[102]
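The principle of caching results that are expensive to compute and likely to be requested again can be sketched in Python with functools.lru_cache. This in-process cache is only a stand-in for memcached, and render_page is a hypothetical placeholder for an expensive operation such as parsing a page.

```python
from functools import lru_cache

CALLS = {"render": 0}  # counts how often the expensive path actually runs


@lru_cache(maxsize=1024)
def render_page(title: str, revision_id: int) -> str:
    """Stand-in for an expensive operation such as parsing wikitext to HTML.

    Keying the cache on (title, revision_id) means a new edit naturally
    produces a cache miss, while repeated views of the same revision hit.
    """
    CALLS["render"] += 1
    return f"<p>Rendered {title} at revision {revision_id}</p>"


for _ in range(3):
    render_page("Main_Page", 42)  # only the first call does real work
render_page("Main_Page", 43)      # a new revision is recomputed
print(CALLS["render"], render_page.cache_info())
```

Including the revision ID in the cache key sidesteps explicit invalidation: stale entries are simply never requested again and eventually fall out of the LRU.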

MediaWiki code is designed to allow for data to be written to a read-write database and read from read-only databases, although the read-write database can be used for some read operations if the read-only databases are not yet up to date. Metadata, such as article revision history, article relations (links, categories etc.), user accounts and settings can be stored in core databases and cached; the actual revision text, being more rarely used, can be stored as append-only blobs in external storage. The software is suitable for the operation of large-scale wiki farms such as Wikimedia, which had about 800 wikis as of August 2011. However, MediaWiki comes with no built-in GUI to manage such installations.
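The read/write split can be sketched as a small router that sends writes to the primary and reads to a replica, falling back to the primary when no replica has caught up. Database, LoadBalancer and the min_position parameter are hypothetical names for this illustration; they are not MediaWiki's actual PHP classes, and replication is only simulated.

```python
from dataclasses import dataclass, field


@dataclass
class Database:
    name: str
    position: int = 0  # hypothetical replication position (number of applied writes)
    rows: dict = field(default_factory=dict)


class LoadBalancer:
    """Hypothetical router: writes go to the primary; reads go to a replica
    unless every replica lags behind the position the caller needs."""

    def __init__(self, primary, replicas):
        self.primary = primary
        self.replicas = replicas

    def write(self, key, value):
        self.primary.rows[key] = value
        self.primary.position += 1
        return self.primary.position  # caller may remember this for later reads

    def replicate(self, replica):
        """Simulate a replica catching up with the primary."""
        replica.rows = dict(self.primary.rows)
        replica.position = self.primary.position

    def read(self, key, min_position=0):
        for replica in self.replicas:
            if replica.position >= min_position:
                return replica.name, replica.rows.get(key)
        # No replica is up to date: fall back to the read-write primary.
        return self.primary.name, self.primary.rows.get(key)


primary = Database("primary")
replica = Database("replica1")
lb = LoadBalancer(primary, [replica])

pos = lb.write("Main_Page", "v1")
print(lb.read("Main_Page", min_position=pos))  # replica lags, so the primary answers
lb.replicate(replica)
print(lb.read("Main_Page", min_position=pos))  # now served by the replica
```

Passing the position returned by a write into later reads lets a user see their own edit immediately, while everyone else's reads stay on the replicas.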

Empirical evidence shows that most revisions in MediaWiki databases differ only slightly from the previous revision. Therefore, subsequent revisions of an article can be concatenated and then compressed together, achieving very high data compression ratios of up to 100×.[102]
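A quick experiment with Python's zlib module shows why concatenating similar revisions helps: the compressor can encode each revision largely as back-references to the previous one. The edit history below is synthetic, so the exact ratio it prints says nothing about real wiki data.

```python
import random
import zlib

# Build a hypothetical edit history: each revision is the previous one
# plus a short appended sentence, mimicking small incremental edits.
rng = random.Random(0)
words = ["wiki", "page", "edit", "link", "text", "history", "media", "article",
         "revision", "user", "talk", "category", "template", "source", "image"]
base = " ".join(rng.choice(words) for _ in range(400))
revisions = [base]
for i in range(1, 50):
    revisions.append(revisions[-1] + f" Edit number {i}.")

raw_size = sum(len(r.encode()) for r in revisions)
# Compress each revision on its own...
separate = sum(len(zlib.compress(r.encode(), 9)) for r in revisions)
# ...versus compressing the concatenated history in one stream.
together = len(zlib.compress("\n".join(revisions).encode(), 9))

print(f"raw bytes:               {raw_size}")
print(f"compressed separately:   {separate}")
print(f"concatenated+compressed: {together}")
print(f"ratio (raw/together):    {raw_size / together:.0f}x")
```

Because DEFLATE only looks back 32 KB, this works best when consecutive revisions fit comfortably within that window.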


The parser serves as the de facto standard for the MediaWiki syntax, as no formal syntax has been defined. Due to this lack of a formal definition, it has been difficult to create WYSIWYG editors for MediaWiki, although several WYSIWYG extensions do exist, including the popular VisualEditor.

MediaWiki is not designed to be a suitable replacement for dedicated online forum or blogging software,[103] although extensions do exist to allow for both of these.[104][105]

It is common for new MediaWiki users to make certain mistakes, such as forgetting to sign posts with four tildes (~~~~)[106] or manually entering a plaintext signature,[107] due to unfamiliarity with the idiosyncratic conventions of communication on MediaWiki discussion pages. On the other hand, one educator has cited the format of these discussion pages as a strength, stating that it provides more fine-grained capabilities for discussion than traditional threaded discussion forums. For example, instead of 'replying' to an entire message, a participant in a discussion can create a hyperlink to a new wiki page on any word from the original page. Discussions are easier to follow because the content is available via hyperlinked wiki pages rather than as a series of reply messages on a traditional threaded discussion forum. However, except in a few cases, students were not using this capability, possibly because of their familiarity with the traditional linear discussion style and a lack of guidance on how to make the content more 'link-rich'.[108]
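Signatures are expanded when a page is saved: in MediaWiki, three tildes produce a signature, four produce a signature plus timestamp, and five produce a timestamp alone. The Python sketch below imitates that substitution; the function name and the exact signature and timestamp formats are simplified assumptions rather than MediaWiki's real output.

```python
import re
from datetime import datetime, timezone


def expand_signatures(text: str, user: str, now: datetime) -> str:
    """Hypothetical, simplified expansion of tilde signatures on save.

    ~~~   -> signature only
    ~~~~  -> signature and timestamp
    ~~~~~ -> timestamp only
    Assumes `now` is already in UTC; no time zone conversion is done.
    """
    signature = f"[[User:{user}|{user}]] ([[User talk:{user}|talk]])"
    timestamp = f"{now:%H:%M}, {now.day} {now:%B %Y} (UTC)"
    replacements = {3: signature, 4: f"{signature} {timestamp}", 5: timestamp}
    # Try the longest run first (5, then 4, then 3) so "~~~~~" is not
    # misread as "~~~~" followed by a stray tilde.
    return re.sub(r"~{5}|~{4}|~{3}", lambda m: replacements[len(m.group())], text)


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(expand_signatures("I agree. ~~~~", "Alice", now))
```

Typing a plaintext name instead of tildes bypasses this step entirely, which is why a manually entered signature lacks the links and timestamp other editors rely on.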

MediaWiki by default has little support for the creation of dynamically assembled documents, or pages that aggregate data from other pages. Some research has been done on enabling such features directly within MediaWiki.[109] The Semantic MediaWiki extension provides these features. It is not used on Wikipedia but is used in more than 1,600 other MediaWiki installations.[110] However, the Wikibase Repository and Wikibase Repository client are implemented in Wikidata and Wikipedia respectively; to some extent they provide semantic web features and link centrally stored data to infoboxes in various Wikipedia articles.

Upgrading MediaWiki is usually fully automated, requiring no changes to the site content or template programming. Historically, problems have been encountered when upgrading from significantly older versions.[111]

MediaWiki developers have enacted security standards for both core code and extensions.[112] SQL queries and HTML output are usually produced through wrapper functions that handle validation, escaping and filtering to prevent cross-site scripting and SQL injection.[113] Many security issues have had to be patched after a MediaWiki version release,[114] and accordingly MediaWiki.org states, "The most important security step you can take is to keep your software up to date", recommending that administrators subscribe to the announcement mailing list and install security updates as they are announced.[115]
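The wrapper-function idea can be illustrated with Python's standard library: a parameterized query keeps user input out of the SQL text, and HTML escaping keeps it out of the markup. These are Python's sqlite3 and html modules used as a stand-in, not MediaWiki's PHP wrapper functions, and add_page and render_title are hypothetical helpers.

```python
import html
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE page (page_id INTEGER PRIMARY KEY, page_title TEXT)")


def add_page(title: str) -> None:
    # The "?" placeholder passes the title as data, never as SQL text,
    # so quotes or semicolons in the title cannot alter the query.
    conn.execute("INSERT INTO page (page_title) VALUES (?)", (title,))


def render_title(title: str) -> str:
    # Escape <, >, & and quotes before placing user text in HTML output,
    # which neutralizes injected <script> tags (cross-site scripting).
    return f"<h1>{html.escape(title)}</h1>"


malicious = "x'); DROP TABLE page; --<script>alert(1)</script>"
add_page(malicious)
stored = conn.execute("SELECT page_title FROM page").fetchone()[0]
print(stored == malicious)  # stored verbatim; the table is intact
print(render_title(stored))
```

Routing every query and every piece of output through helpers like these means the escaping cannot be forgotten at individual call sites.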

Support for MediaWiki users consists of:

MediaWiki is free and open-source software distributed under the terms of the GNU General Public License version 2 or any later version. Its documentation, located at its official website at www.mediawiki.org, is released under the Creative Commons BY-SA 4.0 license, except for a set of help pages that are released into the public domain so that they can be freely copied into fresh wiki installations and/or distributed with MediaWiki software without legal issues for wikis with other licenses.[119][120] MediaWiki's development has generally favored the use of open-source media formats.[121]

MediaWiki has an active volunteer community for development and maintenance. MediaWiki developers are spread around the world, though with a majority in the United States and Europe. Face-to-face meetings and programming sessions for MediaWiki developers have been held once or several times a year since 2004.[122]

Anyone can submit patches to the project's Git/Gerrit repository.[123] There are also paid programmers who primarily develop projects for the Wikimedia Foundation. MediaWiki developers participate in the Google Summer of Code by facilitating the assignment of mentors to students wishing to work on MediaWiki core and extension projects.[124] During the year prior to November 2012, about two hundred developers committed changes to the MediaWiki core or extensions.[125] Major MediaWiki releases are generated approximately every six months by taking snapshots of the development branch, which is kept continuously in a runnable state;[126] minor releases, or point releases, are issued as needed to correct bugs (especially security problems). MediaWiki is developed on a continuous integration development model, in which software changes are pushed live to Wikimedia sites on a regular basis.[126] MediaWiki also has a public bug tracker, phabricator.wikimedia.org, which runs Phabricator. The site is also used for feature and enhancement requests.

When Wikipedia was launched in January 2001, it ran on an existing wiki software system, UseModWiki. UseModWiki is written in the Perl programming language, and stores all wiki pages in text (.txt) files. This software soon proved to be limiting, in both functionality and performance. In mid-2001, Magnus Manske—a developer and student at the University of Cologne, as well as a Wikipedia editor—began working on new software that would replace UseModWiki, specifically designed for use by Wikipedia. This software was written in the PHP scripting language, and stored all of its information in a MySQL database. The new software was largely developed by August 24, 2001, and a test wiki for it was established shortly thereafter.

The first full implementation of this software was the new Meta Wikipedia on November 9, 2001. There was a desire to have it implemented immediately on the English-language Wikipedia.[127] However, Manske was apprehensive about any potential bugs harming the nascent website during the period of the final exams he had to complete immediately prior to Christmas;[128] this led to the launch on the English-language Wikipedia being delayed until January 25, 2002. The software was then gradually deployed on all the Wikipedia language sites of that time. This software was referred to as "the PHP script" and as "phase II", with the name "phase I" retroactively given to the use of UseModWiki.

Increasing usage soon caused load problems again, and soon afterwards another rewrite of the software began, this time by Lee Daniel Crocker; it became known as "phase III". This new software was also written in PHP with a MySQL backend, and it kept the basic interface of the phase II software while adding greater scalability. The "phase III" software went live on Wikipedia in July 2002.

The Wikimedia Foundation was announced on June 20, 2003. In July, Wikipedia contributor Daniel Mayer suggested the name "MediaWiki" for the software, as a play on "Wikimedia".[129] The MediaWiki name was gradually phased in, beginning in August 2003. The name has frequently caused confusion due to its (intentional) similarity to the "Wikimedia" name (which itself is similar to "Wikipedia").[130] The first version of MediaWiki, 1.1, was released in December 2003.

The old product logo was created by Erik Möller, using a flower photograph taken by Florence Nibart-Devouard, and was originally submitted to the logo contest for a new Wikipedia logo, held from July 20 to August 27, 2003.[131][132] The logo came in third place, and was chosen to represent MediaWiki rather than Wikipedia, with the second place logo being used for the Wikimedia Foundation.[133] The double square brackets ([[ ]]) symbolize the syntax MediaWiki uses for creating hyperlinks to other wiki pages; while the sunflower represents the diversity of content on Wikipedia, its constant growth, and the wilderness.[134]

Later, Brooke Vibber, the chief technical officer of the Wikimedia Foundation,[135] took up the role of release manager.[136][101]

Major milestones in MediaWiki's development have included: the categorization system (2004); parser functions (2006); Flagged Revisions (2008);[68] the "ResourceLoader", a delivery system for CSS and JavaScript (2011);[137] and the VisualEditor, a "what you see is what you get" (WYSIWYG) editing platform (2013).[138]

A contest to design a new logo was launched on June 22, 2020, because the old logo was a bitmap image with "high details", which caused problems when it was rendered at both high and low resolutions. After two rounds of voting, the new and current MediaWiki logo, designed by Serhio Magpie, was selected on October 24, 2020, and officially adopted on April 1, 2021.[139]

MediaWiki's most famous use has been in Wikipedia and, to a lesser degree, the Wikimedia Foundation's other projects. Fandom, a wiki hosting service formerly known as Wikia, runs on MediaWiki. Other public wikis that run on MediaWiki include wikiHow and SNPedia. WikiLeaks began as a MediaWiki-based site, but is no longer a wiki.

A number of alternative wiki encyclopedias to Wikipedia run on MediaWiki, including Citizendium, Metapedia, Scholarpedia and Conservapedia. MediaWiki is also used internally by a large number of companies, including Novell and Intel.[140][141]

Notable usages of MediaWiki within governments include Intellipedia, used by the United States Intelligence Community, Diplopedia, used by the United States Department of State, and milWiki, a part of milSuite used by the United States Department of Defense. United Nations agencies such as the United Nations Development Programme and INSTRAW chose to implement their wikis using MediaWiki, because "this software runs Wikipedia and is therefore guaranteed to be thoroughly tested, will continue to be developed well into the future, and future technicians on these wikis will be more likely to have exposure to MediaWiki than any other wiki software."[142]

The Free Software Foundation uses MediaWiki to implement the LibrePlanet site.[143]

Users of online collaboration software are familiar with MediaWiki's functions and layout due to its noted use on Wikipedia. A 2006 overview of social software in academia observed that "Compared to other wikis, MediaWiki is also fairly aesthetically pleasing, though simple, and has an easily customized side menu and stylesheet."[144] However, in one assessment in 2006, Confluence was deemed to be a superior product due to its very usable API and ability to better support multiple wikis.[76]

A 2009 study at the University of Hong Kong compared TWiki to MediaWiki. The authors noted that TWiki has been considered a collaborative tool for the development of educational papers and technical projects, whereas MediaWiki's most noted use is on Wikipedia. Although both platforms allow discussion and tracking of progress, TWiki has a "Report" part that MediaWiki lacks. Students perceived MediaWiki as being easier to use and more enjoyable than TWiki. When asked whether they would recommend using MediaWiki for a knowledge management course group project, 15 out of 16 respondents expressed their preference for MediaWiki, giving answers of great certainty such as "of course" and "for sure".[145] TWiki and MediaWiki both have flexible plug-in architectures.[146]

A 2009 study that compared students' experience with MediaWiki to that with Google Docs found that students gave the latter a much higher rating on user-friendly layout.[147]

A 2021 study conducted by the Brazilian Nuclear Engineering Institute compared a MediaWiki-based knowledge management system against two others that were based on DSpace and Open Journal Systems, respectively.[148] It highlighted ease of use as an advantage of the MediaWiki-based system, noting that because the Wikimedia Foundation had been developing MediaWiki for a site aimed at the general public (Wikipedia), "its user interface was designed to be more user-friendly from start, and has received large user feedback over a long time", in contrast to DSpace's and OJS's focus on niche audiences.[148]


Web design encompasses many different skills and disciplines in the production and maintenance of websites. The different areas of web design include web graphic design; user interface design (UI design); authoring, including standardised code and proprietary software; user experience design (UX design); and search engine optimization. Often many individuals will work in teams covering different aspects of the design process, although some designers will cover them all.[1] The term "web design" is normally used to describe the design process relating to the front-end (client side) design of a website including writing markup. Web design partially overlaps web engineering in the broader scope of web development. Web designers are expected to have an awareness of usability and be up to date with web accessibility guidelines.

Although web design has a fairly recent history, it can be linked to other areas such as graphic design, user experience and multimedia arts, but it is more aptly seen from a technological standpoint. It has become a large part of people's everyday lives; it is hard to imagine the Internet without animated graphics, different styles of typography, backgrounds, videos and music. The web was announced on August 6, 1991; in November 1992, CERN was the first website to go live on the World Wide Web. During this period, websites were structured using the <table> tag, which arranged content into rows and columns. Eventually, web designers found ways around it to create more structures and formats. In the early days, website structures were fragile and hard to maintain, which made the sites difficult to use. In November 1993, ALIWEB (Archie Like Indexing for the Web) became the first web search engine.[2]

In 1989, whilst working at CERN in Switzerland, British scientist Tim Berners-Lee proposed to create a global hypertext project, which later became known as the World Wide Web. The World Wide Web was born between 1991 and 1993. Text-only HTML pages could be viewed using a simple line-mode web browser.[3] In 1993 Marc Andreessen and Eric Bina created the Mosaic browser. At the time there were multiple browsers, but the majority of them were Unix-based and naturally text-heavy. There had been no integrated approach to graphic design elements such as images or sounds. The Mosaic browser broke this mould.[4] The W3C was created in October 1994 to "lead the World Wide Web to its full potential by developing common protocols that promote its evolution and ensure its interoperability."[5] This discouraged any one company from monopolizing a proprietary browser and programming language, which could have altered the effect of the World Wide Web as a whole. The W3C continues to set standards today, for example for HTML, CSS and many JavaScript APIs. In 1994 Andreessen formed Mosaic Communications Corp., which later became Netscape Communications and released the Netscape 0.9 browser. Netscape created its own HTML tags without regard to the traditional standards process. For example, Netscape 1.1 included tags for changing background colours and formatting text with tables on web pages. From 1996 to 1999, Microsoft and Netscape fought for browser dominance in what became known as the browser wars. During this time there were many new technologies in the field, notably Cascading Style Sheets, JavaScript, and Dynamic HTML. On the whole, the browser competition led to many positive creations and helped web design evolve at a rapid pace.[6]

In 1996, Microsoft released its first competitive browser, which came complete with its own features and HTML tags. It was also the first browser to support style sheets, which at the time were seen as an obscure authoring technique and are today an important aspect of web design.[6] The HTML markup for tables was originally intended for displaying tabular data. However, designers quickly realized the potential of using HTML tables to create complex, multi-column layouts that were otherwise not possible. At this time, as design and good aesthetics seemed to take precedence over good markup structure, little attention was paid to semantics and web accessibility. HTML sites were limited in their design options, even more so with earlier versions of HTML. To create complex designs, many web designers had to use complicated table structures or even blank spacer .GIF images to stop empty table cells from collapsing.[7] CSS was introduced in December 1996 by the W3C to support presentation and layout. This allowed HTML code to be semantic rather than both semantic and presentational, and improved web accessibility (see tableless web design).

In 1996, Flash (originally known as FutureSplash) was developed. At the time, the Flash content development tool was relatively simple compared to now, using basic layout and drawing tools, a limited precursor to ActionScript, and a timeline, but it enabled web designers to go beyond what HTML, animated GIFs and JavaScript could do. However, because Flash required a plug-in, many web developers avoided using it for fear of limiting their market share due to lack of compatibility. Instead, designers reverted to GIF animations (if they did not forgo motion graphics altogether) and JavaScript for widgets. But the benefits of Flash made it popular enough among specific target markets to eventually work its way onto the vast majority of browsers, and it was powerful enough to be used to develop entire sites.[7]

In 1998, Netscape released the Netscape Communicator code under an open-source licence, enabling thousands of developers to participate in improving the software. These developers decided to start a standard for the web from scratch, which guided the development of the open-source browser and soon expanded to a complete application platform.[6] The Web Standards Project was formed to promote browser compliance with HTML and CSS standards. Test suites such as Acid1, Acid2, and Acid3 were created to test browsers for compliance with web standards. In 2000, Internet Explorer for Mac was released; it was the first browser to fully support HTML 4.01 and CSS 1, and also the first to fully support the PNG image format.[6] By 2001, after a campaign by Microsoft to popularize Internet Explorer, Internet Explorer had reached 96% of web browser usage share, which signified the end of the first browser wars, as Internet Explorer had no real competition.[8]

Since the start of the 21st century, the web has become more and more integrated into people's lives. As this has happened the technology of the web has also moved on. There have also been significant changes in the way people use and access the web, and this has changed how sites are designed.

Since the end of the browser wars, new browsers have been released. Many of these are open source, which tends to mean faster development and stronger support for new standards. Many consider these new options to be better than Microsoft's Internet Explorer.

The W3C has released new standards for HTML (HTML5) and CSS (CSS3), as well as new JavaScript APIs, each as a separate standard. While the term HTML5 strictly refers only to the new version of HTML and some of the JavaScript APIs, it has become common to use it to refer to the entire suite of new standards (HTML5, CSS3 and JavaScript).

With the advancements in 3G and LTE internet coverage, a significant portion of website traffic shifted to mobile devices. This shift influenced the web design industry, steering it towards a minimalist, lighter, and more simplistic style. The "mobile first" approach emerged as a result, emphasizing the creation of website designs that prioritize mobile-oriented layouts first, before adapting them to larger screen dimensions.

Web designers use a variety of different tools depending on what part of the production process they are involved in. These tools are updated over time by newer standards and software but the principles behind them remain the same. Web designers use both vector and raster graphics editors to create web-formatted imagery or design prototypes. A website can be created using WYSIWYG website builder software or a content management system, or the individual web pages can be hand-coded in just the same manner as the first web pages were created. Other tools web designers might use include markup validators[9] and other testing tools for usability and accessibility to ensure their websites meet web accessibility guidelines.[10]

User experience (UX) design is a discipline closely related to web design that shapes products around an accurate understanding of their users. UX is broader than the web: its fundamentals can be applied to many other kinds of software and apps, whereas web design is concerned mainly with websites. UX design therefore overlaps with web design, and much of it focuses on products that are less web-based.[11]

Marketing and communication design on a website may identify what works for its target market. This can be an age group or a particular strand of culture; the designer may therefore study the trends of its audience. Designers may also consider the type of website they are designing; for example, business-to-business (B2B) website design considerations might differ greatly from those of a consumer-targeted website such as a retail or entertainment website. Care might be taken to ensure that the aesthetics or overall design of a site do not clash with the clarity and accuracy of the content or the ease of web navigation,[12] especially on a B2B website. Designers may also consider the reputation of the owner or business the site represents to make sure it is portrayed favorably. Web designers normally oversee how the websites they build work, and they constantly update and change sites behind the scenes. The elements they work with include text, photos, graphics and page layout. Before beginning work on a website, web designers normally set an appointment with their clients to discuss layout, colour, graphics and design. Web designers spend most of their time designing websites and making sure they load quickly. Their work also typically involves testing, marketing, and communicating with other designers about site layout and choosing the right elements for the websites.[13]

User understanding of the content of a website often depends on user understanding of how the website works. This is part of the user experience design. User experience is related to layout, clear instructions, and labeling on a website. How well a user understands how they can interact on a site may also depend on the interactive design of the site. If a user perceives the usefulness of the website, they are more likely to continue using it. Users who are skilled and well versed in website use may find a more distinctive, yet less intuitive or less user-friendly website interface useful nonetheless. However, users with less experience are less likely to see the advantages or usefulness of a less intuitive website interface. This drives the trend for a more universal user experience and ease of access to accommodate as many users as possible regardless of user skill.[14] Much of the user experience design and interactive design are considered in the user interface design.

Advanced interactive functions may require plug-ins, or else advanced coding skills. Choosing whether or not to use interactivity that requires plug-ins is a critical decision in user experience design. If the plug-in doesn't come pre-installed with most browsers, there's a risk that the user will have neither the know-how nor the patience to install a plug-in just to access the content. If the function requires advanced coding skills, it may be too costly in either time or money to code compared to the amount of enhancement the function will add to the user experience. There's also a risk that advanced interactivity may be incompatible with older browsers or hardware configurations. Publishing a function that doesn't work reliably is potentially worse for the user experience than making no attempt. Whether such a function is likely to be needed, or worth the risks, depends on the target audience.

Progressive enhancement is a strategy in web design that puts emphasis on web content first, allowing everyone to access the basic content and functionality of a web page, whilst users with additional browser features or faster Internet access receive the enhanced version instead.

In practice, this means serving content through HTML and applying styling and animation through CSS to the extent technically possible, then applying further enhancements through JavaScript. Pages' text is loaded immediately through the HTML source code rather than having to wait for JavaScript to initiate and load the content subsequently, which allows content to be readable with minimum loading time and bandwidth, and through text-based browsers, and maximizes backwards compatibility.[15]

As an example, MediaWiki-based sites including Wikipedia use progressive enhancement: they remain usable when JavaScript and even CSS are deactivated, because the pages' content is included in their HTML source code. By contrast, Everipedia relies on JavaScript to load pages' content after the initial page load, so a blank page appears when JavaScript is deactivated.
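The difference between the two approaches can be sketched by extracting the text a browser with JavaScript disabled would display. The two page snippets and the helper names are hypothetical, and the extractor is deliberately simplified (it ignores CSS, hidden elements and the like).

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collect the text a browser with JavaScript disabled would show.

    Text inside <script> and <style> elements is skipped, because it is
    never rendered as page content when scripts do not run.
    """

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth and data.strip():
            self.parts.append(data.strip())


def text_without_js(page_html: str) -> str:
    parser = VisibleTextExtractor()
    parser.feed(page_html)
    parser.close()
    return " ".join(parser.parts)


# Hypothetical pages: content in the HTML source vs. content injected by script.
enhanced = '<p>MediaWiki is wiki software.</p><script src="enhance.js"></script>'
js_only = '<div id="app"></div><script>app.innerHTML = "MediaWiki is wiki software."</script>'

print(repr(text_without_js(enhanced)))
print(repr(text_without_js(js_only)))
```

The progressively enhanced page still yields its text, while the script-dependent page yields an empty string, the "blank page" case described above.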

Part of the user interface design is affected by the quality of the page layout. For example, a designer may consider whether the site's page layout should remain consistent on different pages when designing the layout. Page pixel width may also be considered vital for aligning objects in the layout design. The most popular fixed-width websites generally have the same set width to match the current most popular browser window, at the current most popular screen resolution, on the current most popular monitor size. Most pages are also center-aligned for concerns of aesthetics on larger screens.

Fluid layouts increased in popularity around 2000 because they let the browser adjust the layout to the details of the reader's screen (window size, font size relative to the window, etc.). They grew as an alternative to HTML-table-based layouts and grid-based design, both as a page layout design principle and as a coding technique, but were very slow to be adopted.[note 1] This was due to considerations of screen reading devices and varying window sizes, which designers have no control over. Accordingly, a design may be broken down into units (sidebars, content blocks, embedded advertising areas, navigation areas) that are sent to the browser and fitted into the display window by the browser as best it can. Such a display may change the relative position of major content units; for example, sidebars may be displaced below the body text rather than placed beside it. This is more flexible than a hard-coded grid-based layout that doesn't fit the device window. In particular, the relative position of content blocks may change while the content within each block is unaffected. This also minimizes the user's need to scroll the page horizontally.
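The idea of units being fitted into the window can be sketched as a simple wrapping algorithm. The flow_layout function and the pixel widths are hypothetical; real browsers use CSS layout rules that also let blocks shrink and grow, which this sketch ignores.

```python
def flow_layout(blocks, window_width):
    """Hypothetical sketch of a fluid layout: place (name, min_width) units
    left to right and wrap to a new row when the window runs out of space.
    Returns a list of rows, each a list of block names."""
    rows, current, used = [], [], 0
    for name, min_width in blocks:
        # Start a new row if this unit would overflow the current one.
        if current and used + min_width > window_width:
            rows.append(current)
            current, used = [], 0
        current.append(name)
        used += min_width
    if current:
        rows.append(current)
    return rows


blocks = [("navigation", 200), ("content", 600), ("sidebar", 250)]
print(flow_layout(blocks, 1200))  # [['navigation', 'content', 'sidebar']]
print(flow_layout(blocks, 800))   # [['navigation', 'content'], ['sidebar']]
print(flow_layout(blocks, 500))   # [['navigation'], ['content'], ['sidebar']]
```

At 800 pixels the sidebar drops below the body text rather than forcing a horizontal scrollbar, while the content of each block is left untouched.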

Responsive web design is a newer approach based on CSS3 and on a deeper level of per-device specification within the page's style sheet, achieved through enhanced use of the CSS @media rule. In March 2018, Google announced that it would be rolling out mobile-first indexing.[16] Sites using responsive design are well placed to meet this new approach.

Web designers may choose to limit the typefaces on a website to a few of similar style, instead of using a wide range of typefaces or type styles. Most browsers recognize a specific set of safe fonts, which designers mainly use to avoid complications.

Font downloading was later included in the CSS3 fonts module and has since been implemented in Safari 3.1, Opera 10, and Mozilla Firefox 3.5. This has subsequently increased interest in web typography, as well as the usage of font downloading.

Most site layouts incorporate negative space to break the text up into paragraphs and also avoid center-aligned text.[17]

The page layout and user interface may also be affected by the use of motion graphics. Whether to use motion graphics may depend on the target market for the website. Motion graphics may be expected, or at least better received, on an entertainment-oriented website. However, an audience with a more serious or formal interest (such as business, community, or government) might find animations unnecessary and distracting if they serve only entertainment or decoration. This doesn't mean that more serious content couldn't be enhanced with animated or video presentations that are relevant to the content. In either case, motion graphic design may make the difference between effective and distracting visuals.

Motion graphics that are not initiated by the site visitor can cause accessibility issues. The World Wide Web Consortium (W3C) accessibility standards require that site visitors be able to disable the animations.[18]

Website designers may consider it good practice to conform to standards. This is usually done via a description specifying what each element is doing. Failing to conform to standards may not make a website unusable or error-prone, but standards can relate to the correct layout of pages for readability as well as to making sure coded elements are closed appropriately. This includes avoiding errors in code, organizing code more clearly, and making sure IDs and classes are identified properly. Poorly coded pages are sometimes colloquially called tag soup. Validation with the W3C validator[9] can only be done when a correct DOCTYPE declaration is made; the validator then highlights errors in the code and areas that do not conform to web design standards, which the user can then correct.[19]
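To make the "tag soup" problem concrete, here is a minimal sketch, built on Python's standard `html.parser`, that flags a missing DOCTYPE and elements that are never closed. It is far simpler and stricter than the W3C validator: HTML legitimately allows some end tags (such as `</p>` and `</li>`) to be omitted, which this hypothetical `TagBalanceChecker` would still report.

```python
from html.parser import HTMLParser

# Elements that never take an end tag in HTML.
VOID_ELEMENTS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
                 "link", "meta", "source", "track", "wbr"}


class TagBalanceChecker(HTMLParser):
    """Report a missing DOCTYPE and unbalanced tags (a rough 'tag soup' detector)."""

    def __init__(self) -> None:
        super().__init__()
        self.stack: list[tuple[str, int]] = []  # (tag, line) of currently open elements
        self.errors: list[str] = []
        self.saw_doctype = False

    def handle_decl(self, decl: str) -> None:
        if decl.lower().startswith("doctype"):
            self.saw_doctype = True

    def handle_starttag(self, tag, attrs) -> None:
        if tag not in VOID_ELEMENTS:
            self.stack.append((tag, self.getpos()[0]))

    def handle_startendtag(self, tag, attrs) -> None:
        pass  # "<br/>"-style tags open and close in one step

    def handle_endtag(self, tag) -> None:
        if tag not in (name for name, _ in self.stack):
            self.errors.append(f"stray </{tag}> at line {self.getpos()[0]}")
            return
        # Pop back to the matching open tag; anything above it was never closed.
        while self.stack:
            name, line = self.stack.pop()
            if name == tag:
                break
            self.errors.append(f"<{name}> opened at line {line} is never closed")

    def close(self) -> None:
        super().close()
        for name, line in self.stack:
            self.errors.append(f"<{name}> opened at line {line} is never closed")
        self.stack.clear()
        if not self.saw_doctype:
            self.errors.insert(0, "missing DOCTYPE declaration")


checker = TagBalanceChecker()
checker.feed("<!DOCTYPE html>\n<div>\n<span>Hello\n</div>")
checker.close()
print(checker.errors)  # ['<span> opened at line 3 is never closed']
```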

There are two ways websites are generated: statically or dynamically.

A static website stores a unique file for every page. Each time that page is requested, the same content is returned. This content is created once, during the design of the website. It is usually manually authored, although some sites use an automated creation process, similar to a dynamic website, whose results are stored long-term as completed pages. These automatically created static sites became more popular around 2015, with generators such as Jekyll and Adobe Muse.[20]
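The automated creation process amounts to rendering every page once, at build time, and writing the finished files to disk. The sketch below assumes a hypothetical in-memory `PAGES` dictionary and a one-line layout; generators such as Jekyll work from source files and templates instead, and nothing here mirrors their actual interfaces.

```python
import tempfile
from html import escape
from pathlib import Path

# Hypothetical site content: slug -> (title, body text).
PAGES = {
    "index": ("Home", "Welcome to our site."),
    "about": ("About us", "Who we are & what we do."),
}

LAYOUT = ("<!DOCTYPE html>\n<html><head><title>{title}</title></head>"
          "<body><h1>{title}</h1><p>{body}</p></body></html>\n")


def build_site(output_dir: Path) -> list[Path]:
    """Render every page once and store it as a finished .html file."""
    output_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for slug, (title, body) in PAGES.items():
        page_html = LAYOUT.format(title=escape(title), body=escape(body))
        path = output_dir / f"{slug}.html"
        path.write_text(page_html, encoding="utf-8")
        written.append(path)
    return written


with tempfile.TemporaryDirectory() as tmp:
    print(sorted(path.name for path in build_site(Path(tmp))))  # ['about.html', 'index.html']
```

Once built, the files can be served by any plain web server; changing a page means rebuilding the site.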

The benefits of static websites were that they were simpler to host, as their server only needed to serve static content rather than execute server-side scripts. This required less server administration and offered less chance of exposing security holes. They could also serve pages more quickly on low-cost server hardware. This advantage became less important as cheap web hosting expanded to offer dynamic features too, and virtual servers offered high performance for short intervals at low cost.

Almost all websites have some static content, as supporting assets such as images and style sheets are usually static, even on a website with highly dynamic pages.

Dynamic websites are generated on the fly, using server-side technology to build web pages. They typically extract their content from one or more back-end databases: some run queries against a relational database to browse a catalog or to summarise numeric information, while others may use a NoSQL document database such as MongoDB to store larger units of content, such as blog posts or wiki articles.
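By contrast with a static page, a dynamic page is assembled from the database each time it is requested. A minimal sketch, assuming a hypothetical `products` table in an in-memory SQLite database: `render_category_page` runs one catalog query and one summary query on every call, so newly inserted rows appear on the next request without any rebuild.

```python
import sqlite3
from html import escape


def make_catalog() -> sqlite3.Connection:
    """Create a hypothetical in-memory product catalog."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (name TEXT, category TEXT, price REAL)")
    conn.executemany(
        "INSERT INTO products VALUES (?, ?, ?)",
        [("Kettle", "kitchen", 29.50), ("Toaster", "kitchen", 45.00), ("Desk lamp", "office", 19.00)],
    )
    return conn


def render_category_page(conn: sqlite3.Connection, category: str) -> str:
    """Build the page at request time from whatever the database holds right now."""
    rows = conn.execute(
        "SELECT name, price FROM products WHERE category = ? ORDER BY name", (category,)
    ).fetchall()
    count, average = conn.execute(
        "SELECT COUNT(*), AVG(price) FROM products WHERE category = ?", (category,)
    ).fetchone()
    items = "".join(f"<li>{escape(name)}: ${price:.2f}</li>" for name, price in rows)
    summary = f"{count} items, average ${average or 0:.2f}"  # AVG is NULL for an empty category
    return f"<h1>{escape(category)}</h1><p>{summary}</p><ul>{items}</ul>"


conn = make_catalog()
print(render_category_page(conn, "kitchen"))
conn.execute("INSERT INTO products VALUES ('Blender', 'kitchen', 60.00)")
print(render_category_page(conn, "kitchen"))  # the new product shows up on the next render
```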

In the design process, dynamic pages are often mocked-up or wireframed using static pages. The skillset needed to develop dynamic web pages is much broader than for a static page, involving server-side and database coding as well as client-side interface design. Even medium-sized dynamic projects are thus almost always a team effort.

When dynamic web pages first developed, they were typically coded directly in languages such as Perl, PHP or ASP. Some of these, notably PHP and ASP, used a 'template' approach where a server-side page resembled the structure of the completed client-side page, and data was inserted into places defined by 'tags'. This was a quicker means of development than coding in a purely procedural coding language such as Perl.
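The "template" approach can be illustrated with Python's `string.Template`, standing in for PHP or ASP: the template text already has the shape of the finished page, and `$`-prefixed placeholders mark where data is inserted. This is an analogy only, not PHP or ASP syntax, and `render` is a hypothetical helper.

```python
from html import escape
from string import Template

# The template has the shape of the finished page; $placeholders play the role of the
# server-side tags that mark where data is inserted.
ARTICLE_PAGE = Template(
    "<html>\n"
    "  <body>\n"
    "    <h1>$title</h1>\n"
    "    <p>Posted by $author on $date</p>\n"
    "  </body>\n"
    "</html>\n"
)


def render(title: str, author: str, date: str) -> str:
    """Fill the placeholders, escaping values so they cannot inject markup."""
    return ARTICLE_PAGE.substitute(title=escape(title), author=escape(author), date=escape(date))


print(render("Tags & templates", "Ada", "2024-01-01"))
```

Compared with building the same page through a series of procedural print statements, the markup stays readable as a single page, which is the speed-up the template approach offered.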

Both of these approaches have now been supplanted for many websites by higher-level application-focused tools such as content management systems. These build on top of general-purpose coding platforms and assume that a website exists to offer content according to one of several well-recognised models, such as a time-sequenced blog, a thematic magazine or news site, a wiki, or a user forum. These tools make implementing such a site very easy, turning it into a purely organizational and design-based task that requires no coding.

Editing the content itself (as well as the template page) can be done both through the site itself and with third-party software. The ability to edit all pages is provided only to a specific category of users (for example, administrators or registered users). Less frequently, anonymous users are allowed to edit certain web content (for example, adding messages on forums). Wikipedia is an example of a site that allows anonymous edits.

Usability experts, including Jakob Nielsen and Kyle Soucy, have often emphasised homepage design for website success and asserted that the homepage is the most important page on a website.[21][22][23] However, practitioners in the 2000s were starting to find that a growing share of website traffic was bypassing the homepage, going directly to internal content pages through search engines, e-newsletters and RSS feeds.[24] This led many practitioners to argue that homepages are less important than most people think.[25][26][27][28] Jared Spool argued in 2007 that a site's homepage was actually the least important page on a website.[29]

In 2012 and 2013, carousels (also called 'sliders' and 'rotating banners') became an extremely popular design element on homepages, often used to showcase featured or recent content in a confined space.[30] Many practitioners argue that carousels are an ineffective design element that hurts a website's search engine optimisation and usability.[30][31][32]

There are two primary jobs involved in creating a website, web designer and web developer, and the two often work closely together.[33] Web designers are responsible for the visual aspect, which includes the layout, colouring, and typography of a web page. Web designers also have a working knowledge of markup languages such as HTML and CSS, although the extent of their knowledge differs from one web designer to another. Particularly in smaller organizations, one person may need the skills to both design and program the full web page, while larger organizations may have a web designer responsible for the visual aspect alone.

Further jobs which may become involved in the creation of a website include:

ChatGPT and other AI models are being used to write and code websites, making it faster and easier to create them. There are still discussions about the ethical implications of using artificial intelligence for design as the world becomes more familiar with using AI for time-consuming tasks in design processes.[34]

[note 1] <table>-based markup and spacer .GIF images


Screenshot of Google Maps in a web browser

| Type of site | Web mapping |
|---|---|
| Available in | 74 languages |
| Owner | |
| URL | google |
| Commercial | Yes |
| Registration | Optional, included with a Google Account |
| Launched | February 8, 2005 |
| Current status | Active |
| Written in | C++ (back-end), JavaScript, XML, Ajax (UI) |

List of languages: Afrikaans, Azerbaijani, Indonesian, Malay, Bosnian, Catalan, Czech, Danish, German (Germany), Estonian, English (United States), Spanish (Spain), Spanish (Latin America), Basque, Filipino, French (France), Galician, Croatian, Zulu, Icelandic, Italian, Swahili, Latvian, Lithuanian, Hungarian, Dutch, Norwegian, Uzbek, Polish, Portuguese (Brazil), Portuguese (Portugal), Romanian, Albanian, Slovak, Slovenian, Finnish, Swedish, Vietnamese, Turkish, Greek, Bulgarian, Kyrgyz, Kazakh, Macedonian, Mongolian, Russian, Serbian, Ukrainian, Georgian, Armenian, Hebrew, Urdu, Arabic, Persian, Amharic, Nepali, Hindi, Marathi, Bengali, Punjabi, Gujarati, Tamil, Telugu, Kannada, Malayalam, Sinhala, Thai, Lao, Burmese, Khmer, Korean, Japanese, Simplified Chinese, Traditional Chinese

Google Maps is a web mapping platform and consumer application offered by Google. It offers satellite imagery, aerial photography, street maps, 360° interactive panoramic views of streets (Street View), real-time traffic conditions, and route planning for traveling by foot, car, bike, air (in beta) and public transportation. As of 2020, Google Maps was being used by over one billion people every month around the world.[1]

Google Maps began as a C++ desktop program developed by brothers Lars and Jens Rasmussen in Australia at Where 2 Technologies. In October 2004, the company was acquired by Google, which converted it into a web application. After additional acquisitions of a geospatial data visualization company and a real-time traffic analyzer, Google Maps was launched in February 2005.[2] The service's front end utilizes JavaScript, XML, and Ajax. Google Maps offers an API that allows maps to be embedded on third-party websites,[3] and offers a locator for businesses and other organizations in numerous countries around the world. Google Map Maker allowed users to collaboratively expand and update the service's mapping worldwide but was discontinued in March 2017. However, crowdsourced contributions to Google Maps were not discontinued: the company announced that those features would be transferred to the Google Local Guides program,[4] although users who are not Local Guides can still contribute.

Google Maps' satellite view is a "top-down" or bird's-eye view; most of the high-resolution imagery of cities is aerial photography taken from aircraft flying at 800 to 1,500 feet (240 to 460 m), while most other imagery is from satellites.[5] Much of the available satellite imagery is no more than three years old and is updated on a regular basis, according to a 2011 report.[6] Google Maps previously used a variant of the Mercator projection, and therefore could not accurately show areas around the poles.[7] In August 2018, the desktop version of Google Maps was updated to show a 3D globe. It is still possible to switch back to the 2D map in the settings.
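The difficulty at the poles follows from the Mercator formula itself. On a sphere, latitude φ maps to y = ln(tan(π/4 + φ/2)), which grows without bound as φ approaches 90°. Square web-map tiles therefore cut the map off where y = π, at about 85.05° (the usual Web Mercator convention). The sketch below computes both; it shows the standard spherical formula and does not claim to reproduce Google's exact variant.

```python
import math


def mercator_y(lat_deg: float) -> float:
    """Spherical Mercator y for a unit sphere: y = ln(tan(pi/4 + phi/2))."""
    phi = math.radians(lat_deg)
    return math.log(math.tan(math.pi / 4 + phi / 2))


def latitude_from_y(y: float) -> float:
    """Inverse of mercator_y: phi = atan(sinh(y)), returned in degrees."""
    return math.degrees(math.atan(math.sinh(y)))


# Square web-map tiles span y in [-pi, pi]; this is the latitude where the map is cut off.
MAX_LAT = latitude_from_y(math.pi)
print(f"cut-off latitude: {MAX_LAT:.4f} degrees")  # cut-off latitude: 85.0511 degrees

for lat in (0, 60, 80, 85, 89, 89.9):
    # The spacing between parallels keeps growing; at 90 degrees y would be infinite.
    print(f"{lat:>5} -> y = {mercator_y(lat):.3f}")
```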

Google Maps for mobile devices was first released in 2006; the latest versions feature GPS turn-by-turn navigation along with dedicated parking assistance features. By 2013, it was found to be the world's most popular smartphone app, with over 54% of global smartphone owners using it.[8] In 2017, the app was reported to have two billion users on Android, along with several other Google services including YouTube, Chrome, Gmail, Search, and Google Play.

Google Maps began as a C++ program designed by two Danish brothers, Lars and Jens Eilstrup Rasmussen, together with Noel Gordon and Stephen Ma, at the Sydney-based company Where 2 Technologies, which was founded in early 2003. The program was initially designed to be downloaded separately by users, but the company later pitched the idea of a purely web-based product to Google management, changing the method of distribution.[9] In October 2004, the company was acquired by Google Inc.,[10] where the program was transformed into the web application Google Maps. The Rasmussen brothers, Gordon and Ma joined Google at that time.

In the same month, Google acquired Keyhole, a geospatial data visualization company (with investment from the CIA), whose marquee application suite, Earth Viewer, emerged as the Google Earth application in 2005 while other aspects of its core technology were integrated into Google Maps.[11] In September 2004, Google acquired ZipDash, a company that provided real-time traffic analysis.[12]

The launch of Google Maps was first announced on the Google Blog on February 8, 2005.[13]

In September 2005, in the aftermath of Hurricane Katrina, Google Maps quickly updated its satellite imagery of New Orleans to allow users to view the extent of the flooding in various parts of that city.[14][15]

As of 2007, Google Maps was equipped with a miniature view with a draggable rectangle that denotes the area shown in the main viewport, and "Info windows" for previewing details about locations on maps.[16] By 2024, this feature had been removed, likely several years earlier.