SEO for website: SEO for websites involves optimizing various elements, such as meta tags, keywords, content, and technical structure, to improve visibility in search engine results. By targeting relevant search terms and ensuring a smooth user experience, businesses can increase traffic and generate more leads.

SEO Google: Optimizing for Google involves following best practices to improve a website's rankings in the world's most popular search engine. By creating high-quality content, building credible backlinks, and enhancing technical elements, businesses can achieve greater visibility and attract more organic traffic.

SEO in marketing: SEO plays a critical role in digital marketing by increasing a website's visibility in search engine results. Through keyword research, content optimization, and link-building efforts, SEO helps attract more visitors, generate leads, and ultimately drive conversions.

SEO keyword density: Keyword density refers to the number of times a keyword appears in relation to the total word count on a page. Maintaining an appropriate keyword density helps ensure the content is both reader-friendly and optimized for search engines.

SEO keyword mapping: SEO keyword mapping involves assigning specific keywords to individual pages on a website. This ensures that each page is optimized for a targeted search term, improving overall site structure, relevance, and rankings.

SEO keyword mapping: SEO keyword mapping assigns specific keywords to individual pages on your website. This ensures each page is optimized for a targeted term, improving search visibility and site structure.

SEO keyword planning: SEO keyword planning involves researching and organizing keywords to guide your content strategy. By carefully selecting keywords, you can improve rankings, attract more traffic, and achieve your marketing goals.

SEO keywords: SEO keywords are the specific words and phrases that users enter into search engines. By targeting the right keywords, businesses can improve their search rankings, attract more visitors, and connect with their target audience more effectively.

SEO knowledge sharing: SEO knowledge sharing involves providing educational content, webinars, or guides to help others understand optimization best practices. By sharing insights, businesses can establish themselves as thought leaders, build credibility, and attract more engagement.

SEO marketing: SEO marketing focuses on optimizing a website's visibility in search engine results pages. By targeting relevant keywords, creating valuable content, and building quality backlinks, businesses can attract more visitors, generate leads, and drive long-term growth.

SEO meaning: SEO, or Search Engine Optimization, is the practice of improving a website's visibility in search engine results. By optimizing on-page elements, creating valuable content, and building credible backlinks, businesses can achieve higher rankings, attract organic traffic, and grow their online presence.

SEO North Sydney: SEO services in North Sydney help businesses improve their local visibility, attract more customers, and grow their online presence. By targeting relevant keywords, optimizing local listings, and leveraging data-driven insights, these services deliver measurable improvements and long-term success.

SEO outreach: SEO outreach involves contacting influencers, bloggers, and website owners to promote content, build backlinks, and improve brand visibility. By building relationships and earning quality links, businesses can enhance their site's authority and improve search rankings.

SEO package Australia: SEO packages in Australia offer businesses a range of services designed to boost search rankings and increase visibility. These packages typically include keyword research, technical audits, content optimization, and link building, all tailored to deliver measurable results.

SEO package Sydney: An SEO package in Sydney offers a comprehensive approach to improving website performance. Typically including audits, keyword research, content creation, and link building, these packages help businesses achieve better search rankings and drive more traffic.

SEO packages: SEO packages offer businesses a comprehensive set of services to improve their search rankings.

SEO packages Australia: SEO packages in Australia offer comprehensive solutions for businesses looking to improve their online presence. From site audits to content optimization and link building, these packages provide a clear roadmap to achieving better search rankings and driving more organic traffic.

SEO packages Sydney: SEO packages in Sydney provide local businesses with comprehensive solutions to improve search rankings and increase visibility. These packages often include keyword research, on-page optimization, content creation, and link building to achieve measurable results.

SEO Parramatta: SEO services in Parramatta cater to businesses operating in the western Sydney region. By focusing on local keywords, competitor analysis, and region-specific content, these services help companies in Parramatta boost their online visibility, attract more local customers, and grow their brand presence.

SEO Parramatta: SEO services in Parramatta help local businesses improve their online visibility, attract more customers, and strengthen their presence in the western Sydney region. By targeting location-based keywords, optimizing local listings, and creating geo-targeted content, these services deliver effective results.


Screenshot: The Main Page of the English Wikipedia running an alpha version of MediaWiki 1.40

| Original author(s) | |
|---|---|
| Developer(s) | Wikimedia Foundation |
| Initial release | January 25, 2002 |
| Stable release | 1.43.0[1] |
| Repository | |
| Written in | PHP[2] |
| Operating system | Windows, macOS, Linux, FreeBSD, OpenBSD, Solaris |
| Size | 79.05 MiB (compressed) |
| Available in | 459[3] languages |
| Type | Wiki software |
| License | GPLv2+[4] |
| Website | mediawiki |

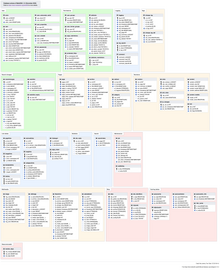

MediaWiki is free and open-source wiki software originally developed by Magnus Manske for use on Wikipedia on January 25, 2002, and further improved by Lee Daniel Crocker,[5][6] after which development has been coordinated by the Wikimedia Foundation. It powers several wiki hosting websites across the Internet, as well as most websites hosted by the Wikimedia Foundation including Wikipedia, Wiktionary, Wikimedia Commons, Wikiquote, Meta-Wiki and Wikidata, which define a large part of the set requirements for the software.[7] Besides its usage on Wikimedia sites, MediaWiki has been used as a knowledge management and content management system on websites such as Fandom, wikiHow and major internal installations like Intellipedia and Diplopedia.

MediaWiki is written in the PHP programming language and stores all text content in a database. The software is optimized to efficiently handle large projects, which can have terabytes of content and hundreds of thousands of views per second.[7][8] Because Wikipedia is one of the world's largest and most visited websites, achieving scalability through multiple layers of caching and database replication has been a major concern for developers. Another major aspect of MediaWiki is its internationalization; its interface is available in more than 400 languages.[9] The software has hundreds of configuration settings[10] and more than 1,000 extensions available for enabling various features to be added or changed.[11]

MediaWiki provides a rich core feature set and a mechanism to attach extensions to provide additional functionality.

Due to the strong emphasis on multilingualism in the Wikimedia projects, internationalization and localization have received significant attention from developers. The user interface has been fully or partially translated into more than 400 languages on translatewiki.net,[9] and can be further customized by site administrators (the entire interface is editable through the wiki).

Several extensions, most notably those collected in the MediaWiki Language Extension Bundle, are designed to further enhance the multilingualism and internationalization of MediaWiki.

Installation of MediaWiki requires that the user have administrative privileges on a server running both PHP and a compatible type of SQL database. Some users find that setting up a virtual host is helpful if the majority of one's site runs under a framework (such as Zope or Ruby on Rails) that is largely incompatible with MediaWiki.[12] Cloud hosting can eliminate the need to deploy a new server.[13]

An installation PHP script is accessed via a web browser to initialize the wiki's settings. It prompts the user for a minimal set of required parameters, leaving further changes, such as enabling uploads,[14] adding a site logo,[15] and installing extensions, to be made by modifying configuration settings contained in a file called LocalSettings.php.[16] Some aspects of MediaWiki can be configured through special pages or by editing certain pages; for instance, abuse filters can be configured through a special page,[17] and certain gadgets can be added by creating JavaScript pages in the MediaWiki namespace.[18] The MediaWiki community publishes a comprehensive installation guide.[19]

One of the earliest differences between MediaWiki (and its predecessor, UseModWiki) and other wiki engines was the use of "free links" instead of CamelCase. When MediaWiki was created, it was typical for wikis to require text like "WorldWideWeb" to create a link to a page about the World Wide Web; links in MediaWiki, on the other hand, are created by surrounding words with double square brackets, and any spaces between them are left intact, e.g. [[World Wide Web]]. This change was logical for the purpose of creating an encyclopedia, where accuracy in titles is important.

MediaWiki uses an extensible[20] lightweight wiki markup designed to be easier to use and learn than HTML. Tools exist for converting content such as tables between MediaWiki markup and HTML.[21] Efforts have been made to create a MediaWiki markup spec, but a consensus seems to have been reached that Wikicode requires context-sensitive grammar rules.[22][23] The following side-by-side comparison illustrates the differences between wiki markup and HTML:

| MediaWiki syntax (the "behind the scenes" code used to add formatting to text) | HTML equivalent (another type of "behind the scenes" code used to add formatting to text) | Rendered output (seen onscreen by a site viewer) |
|---|---|---|
| `====A dialogue====`<br><br>`"Take some more [[tea]]," the March Hare said to Alice, very earnestly.`<br><br>`"I've had nothing yet," Alice replied in an offended tone: "so I can't take more."`<br><br>`"You mean you can't take ''less''," said the Hatter: "it's '''very''' easy to take ''more'' than nothing."` | `<h4>A dialogue</h4>`<br><br>`<p>"Take some more <a href="/wiki/Tea" title="Tea">tea</a>," the March Hare said to Alice, very earnestly.</p>`<br><br>`<p>"I've had nothing yet," Alice replied in an offended tone: "so I can't take more."</p>`<br><br>`<p>"You mean you can't take <i>less</i>," said the Hatter: "it's <b>very</b> easy to take <i>more</i> than nothing."</p>` | **A dialogue**<br><br>"Take some more tea," the March Hare said to Alice, very earnestly.<br><br>"I've had nothing yet," Alice replied in an offended tone: "so I can't take more."<br><br>"You mean you can't take *less*," said the Hatter: "it's **very** easy to take *more* than nothing." |

(Quotation above from Alice's Adventures in Wonderland by Lewis Carroll)

MediaWiki's default page-editing tools have been described as somewhat challenging to learn.[24] A survey of students assigned to use a MediaWiki-based wiki found that when they were asked an open question about main problems with the wiki, 24% cited technical problems with formatting, e.g. "Couldn't figure out how to get an image in. Can't figure out how to show a link with words; it inserts a number."[25]

To make editing long pages easier, MediaWiki allows the editing of a subsection of a page (as identified by its header). A registered user can also indicate whether or not an edit is minor. Correcting spelling, grammar or punctuation are examples of minor edits, whereas adding paragraphs of new text is an example of a non-minor edit.

Sometimes while one user is editing, a second user saves an edit to the same part of the page. Then, when the first user attempts to save the page, an edit conflict occurs. The first user is then given an opportunity to merge their content into the page as it now exists following the second user's page save.
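One common way to detect this situation is to record which revision an edit was based on and reject a save if the page has moved on since. The following Python sketch illustrates that idea only; the `Page` class, its `save` method, and the `EditConflict` exception are hypothetical names for this example and do not reflect MediaWiki's internal code. A real wiki engine may also try to merge non-overlapping changes automatically, which the sketch does not attempt.

```python
from dataclasses import dataclass


class EditConflict(Exception):
    """Raised when the page changed after the editor loaded it (hypothetical)."""


@dataclass
class Page:
    text: str = ""
    revision: int = 0  # incremented on every successful save

    def save(self, new_text: str, base_revision: int) -> int:
        # base_revision is the revision the editor started from.
        if base_revision != self.revision:
            raise EditConflict(
                f"page is at revision {self.revision}, "
                f"edit was based on revision {base_revision}"
            )
        self.text = new_text
        self.revision += 1
        return self.revision
```

If two editors both load revision 0, the save that arrives first succeeds, and the later one raises `EditConflict`, at which point the caller can show both versions for manual merging.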

MediaWiki's user interface has been localized in many different languages. A language for the wiki content itself can also be set, to be sent in the "Content-Language" HTTP header and "lang" HTML attribute.

VisualEditor has its own integrated wikitext editing interface, known as the 2017 wikitext editor; the older editing interface is known as the 2010 wikitext editor.

MediaWiki has an extensible web API (application programming interface) that provides direct, high-level access to the data contained in the MediaWiki databases. Client programs can use the API to log in, get data, and post changes. The API supports thin web-based JavaScript clients and end-user applications (such as vandal-fighting tools). The API can be accessed by the backend of another web site.[26] An extensive Python bot library, Pywikibot,[27] and a popular semi-automated tool called AutoWikiBrowser, also interface with the API.[28] The API is accessed via URLs such as https://en.wikipedia.org/w/api.php?action=query&list=recentchanges. In this case, the query would be asking Wikipedia for information relating to the last 10 edits to the site. One of the perceived advantages of the API is its language independence; it listens for HTTP connections from clients and can send a response in a variety of formats, such as XML, serialized PHP, or JSON.[29] Client code has been developed to provide layers of abstraction to the API.[30]
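As a minimal sketch of how a client might assemble such a request, the Python snippet below builds the recent-changes URL quoted above and asks for JSON output. The function name `recent_changes_url` is made up for this example; `rclimit` and `format` are the API parameters assumed here for the result count and the response format. The snippet only constructs the URL and does not contact the server.

```python
from urllib.parse import urlencode

API_ENDPOINT = "https://en.wikipedia.org/w/api.php"  # endpoint quoted in the text


def recent_changes_url(limit: int = 10, fmt: str = "json") -> str:
    """Build a query URL for the most recent edits (illustrative helper)."""
    params = {
        "action": "query",
        "list": "recentchanges",
        "rclimit": limit,   # number of edits to return
        "format": fmt,      # e.g. "json" or "xml"
    }
    return f"{API_ENDPOINT}?{urlencode(params)}"
```

A client could pass the resulting URL to any HTTP library; a bot framework such as Pywikibot wraps this kind of request behind higher-level calls.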

Among the features of MediaWiki to assist in tracking edits is a Recent Changes feature that provides a list of recent edits to the wiki. This list contains basic information about those edits such as the editing user, the edit summary, the page edited, as well as any tags (e.g. "possible vandalism")[31] added by customizable abuse filters and other extensions to aid in combating unhelpful edits.[32] On more active wikis, so many edits occur that it is hard to track Recent Changes manually. Anti-vandal software, including user-assisted tools,[33] is sometimes employed on such wikis to process Recent Changes items. Server load can be reduced by sending a continuous feed of Recent Changes to an IRC channel that these tools can monitor, eliminating their need to send requests for a refreshed Recent Changes feed to the API.[34][35]

Another important tool is watchlisting. Each logged-in user has a watchlist to which the user can add whatever pages he or she wishes. When an edit is made to one of those pages, a summary of that edit appears on the watchlist the next time it is refreshed.[36] As with the recent changes page, recent edits that appear on the watchlist contain clickable links for easy review of the article history and specific changes made.

There is also the capability to review all edits made by any particular user. In this way, if an edit is identified as problematic, it is possible to check the user's other edits for issues.

MediaWiki allows one to link to specific versions of articles. This has been useful to the scientific community, in that expert peer reviewers could analyse articles, improve them and provide links to the trusted version of that article.[37]

Navigation through the wiki is largely through internal wikilinks. MediaWiki's wikilinks implement page existence detection, in which a link is colored blue if the target page exists on the local wiki and red if it does not. If a user clicks on a red link, they are prompted to create an article with that title. Page existence detection makes it practical for users to create "wikified" articles—that is, articles containing links to other pertinent subjects—without those other articles being yet in existence.
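The idea of page existence detection can be sketched in a few lines of Python. This is a simplified illustration, not MediaWiki's parser: the regular expression handles only plain `[[Title]]` links, the set of existing pages stands in for a database lookup, and the CSS class names `exists` and `new` are assumptions chosen for the example.

```python
import re

# Matches plain [[Title]] links only; piped links and namespaces are ignored.
WIKILINK = re.compile(r"\[\[([^\[\]|]+)\]\]")


def render_links(wikitext: str, existing_pages: set) -> str:
    """Replace wikilinks with anchors whose class reflects page existence."""

    def replace(match: re.Match) -> str:
        title = match.group(1).strip()
        href = "/wiki/" + title.replace(" ", "_")
        # "exists" would be styled blue and "new" red in this sketch.
        css_class = "exists" if title in existing_pages else "new"
        return f'<a class="{css_class}" href="{href}">{title}</a>'

    return WIKILINK.sub(replace, wikitext)
```

For example, rendering `[[Tea]] and [[March Hare]]` against a wiki that only has a "Tea" page yields one link of each class.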

Interwiki links function much the same way as namespaces. A set of interwiki prefixes can be configured to cause, for instance, a page title of wikiquote:Jimbo Wales to direct the user to the Jimbo Wales article on Wikiquote.[38] Unlike internal wikilinks, interwiki links lack page existence detection functionality, and accordingly there is no way to tell whether a blue interwiki link is broken or not.
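A rough sketch of interwiki resolution in Python follows. The prefix table and its URL patterns are assumptions for illustration (a real installation keeps its own configurable table), with `$1` standing for the page title. Note that the function never checks whether the target page exists, which mirrors the limitation described above.

```python
from typing import Optional

# Hypothetical prefix table; "$1" is replaced by the page title.
INTERWIKI_MAP = {
    "wikiquote": "https://en.wikiquote.org/wiki/$1",
    "wiktionary": "https://en.wiktionary.org/wiki/$1",
}


def resolve_interwiki(title: str) -> Optional[str]:
    """Return the external URL for an interwiki title, or None if it is local."""
    prefix, sep, rest = title.partition(":")
    pattern = INTERWIKI_MAP.get(prefix.strip().lower())
    if not sep or pattern is None:
        return None  # no known interwiki prefix: treat as a local title
    return pattern.replace("$1", rest.strip().replace(" ", "_"))
```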

Interlanguage links are the small navigation links that show up in the sidebar in most MediaWiki skins that connect an article with related articles in other languages within the same Wiki family. This can provide language-specific communities connected by a larger context, with all wikis on the same server or each on its own server.[39]

Previously, Wikipedia used interlanguage links to link an article to other articles on the same topic in other editions of Wikipedia. This was superseded by the launch of Wikidata.[40]

Page tabs are displayed at the top of pages. These tabs allow users to perform actions or view pages that are related to the current page. The available default actions include viewing, editing, and discussing the current page. The specific tabs displayed depend on whether the user is logged into the wiki and whether the user has sysop privileges on the wiki. For instance, the ability to move a page or add it to one's watchlist is usually restricted to logged-in users. The site administrator can add or remove tabs by using JavaScript or installing extensions.[41]

Each page has an associated history page from which the user can access every version of the page that has ever existed and generate diffs between two versions of their choice. Users' contributions are displayed not only here, but also via a "user contributions" option on a sidebar. In a 2004 article, Carl Challborn and Teresa Reimann noted that "While this feature may be a slight deviation from the collaborative, 'ego-less' spirit of wiki purists, it can be very useful for educators who need to assess the contribution and participation of individual student users."[42]

MediaWiki provides many features beyond hyperlinks for structuring content. One of the earliest such features is namespaces. One of Wikipedia's earliest problems had been the separation of encyclopedic content from pages pertaining to maintenance and communal discussion, as well as personal pages about encyclopedia editors. Namespaces are prefixes before a page title (such as "User:" or "Talk:") that serve as descriptors for the page's purpose and allow multiple pages with different functions to exist under the same title. For instance, a page titled "[[The Terminator]]", in the default namespace, could describe the 1984 movie starring Arnold Schwarzenegger, while a page titled "[[User:The Terminator]]" could be a profile describing a user who chooses this name as a pseudonym. More commonly, each namespace has an associated "Talk:" namespace, which can be used to discuss its contents, such as "User talk:" or "Template talk:". The purpose of having discussion pages is to allow content to be separated from discussion surrounding the content.[43][44]

Namespaces can be viewed as folders that separate different basic types of information or functionality. Custom namespaces can be added by the site administrators. There are 16 namespaces by default for content, with 2 "pseudo-namespaces" used for dynamically generated "Special:" pages and links to media files. Each namespace on MediaWiki is numbered: content page namespaces have even numbers and their associated talk page namespaces have odd numbers.[45]
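The even/odd numbering makes it easy to move between a content namespace and its talk namespace. The Python sketch below shows the arithmetic; the table lists only a handful of the default namespaces, and the helper function names are invented for this example.

```python
# A few of the default namespace numbers (not the full list).
NAMESPACES = {
    -2: "Media",
    -1: "Special",
    0: "",              # main namespace, no prefix
    1: "Talk",
    2: "User",
    3: "User talk",
    10: "Template",
    11: "Template talk",
}


def is_talk(ns: int) -> bool:
    """Talk namespaces are the positive odd numbers."""
    return ns > 0 and ns % 2 == 1


def talk_namespace(ns: int) -> int:
    """Return the talk namespace paired with ns."""
    if ns < 0:
        raise ValueError("pseudo-namespaces have no talk pages")
    return ns if is_talk(ns) else ns + 1


def subject_namespace(ns: int) -> int:
    """Return the content namespace paired with ns."""
    return ns - 1 if is_talk(ns) else ns
```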

Users can create new categories and add pages and files to those categories by appending one or more category tags to the content text. Adding these tags creates links at the bottom of the page that take the reader to the list of all pages in that category, making it easy to browse related articles.[46] The use of categorization to organize content has been described as a combination of:

In addition to namespaces, content can be ordered using subpages. This simple feature provides automatic breadcrumbs of the pattern [[Page title/Subpage title]] from the page after the slash (in this case, "Subpage title") to the page before the slash (in this case, "Page title").
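The breadcrumb trail for a subpage can be derived from the title alone by splitting on the slash. A minimal Python sketch, with a hypothetical function name:

```python
def breadcrumbs(title: str) -> list:
    """Return the ancestor pages of a subpage, nearest the root first."""
    parts = title.split("/")
    # Every proper prefix of the path is an ancestor page.
    return ["/".join(parts[:i]) for i in range(1, len(parts))]
```

For "Page title/Subpage title" this returns a single ancestor, "Page title"; a page without a slash has no breadcrumbs.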

If the feature is enabled, users can customize their stylesheets and configure client-side JavaScript to be executed with every pageview. On Wikipedia, this has led to a large number of additional tools and helpers developed through the wiki and shared among users. For instance, navigation popups is a custom JavaScript tool that shows previews of articles when the user hovers over links and also provides shortcuts for common maintenance tasks.[48]

The entire MediaWiki user interface can be edited through the wiki itself by users with the necessary permissions (typically called "administrators"). This is done through a special namespace with the prefix "MediaWiki:", where each page title identifies a particular user interface message. Using an extension,[49] it is also possible for a user to create personal scripts, and to choose whether certain sitewide scripts should apply to them by toggling the appropriate options in the user preferences page.

The "MediaWiki:" namespace was originally also used for creating custom text blocks that could then be dynamically loaded into other pages using a special syntax. This content was later moved into its own namespace, "Template:".

Templates are text blocks that can be dynamically loaded inside another page whenever that page is requested. A template is called with a special link in double curly brackets (for example "{{Disputed|date=October 2018}}"), which causes the template (in this case located at Template:Disputed) to load in place of the template call.

Templates are structured documents containing attribute–value pairs. They are defined with parameters, to which are assigned values when transcluded on an article page. The name of the parameter is delimited from the value by an equals sign. A class of templates known as infoboxes is used on Wikipedia to collect and present a subset of information about its subject, usually on the top (mobile view) or top right-hand corner (desktop view) of the document.
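To make the attribute–value structure concrete, the following Python sketch splits a simple template call such as `{{Disputed|date=October 2018}}` into its name and parameters. It is an illustration only: the function name is hypothetical, and nested templates, links containing pipes, and other real-world complications are not handled.

```python
def parse_template_call(call: str):
    """Split '{{Name|key=value|positional}}' into (name, parameters)."""
    if not (call.startswith("{{") and call.endswith("}}")):
        raise ValueError("not a template call")
    name, *args = call[2:-2].split("|")
    params = {}
    position = 0
    for arg in args:
        key, sep, value = arg.partition("=")
        if sep:
            # Named parameter: name and value are separated by an equals sign.
            params[key.strip()] = value.strip()
        else:
            # Unnamed parameters are numbered from 1 in order of appearance.
            position += 1
            params[str(position)] = arg.strip()
    return name.strip(), params
```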

Pages in other namespaces can also be transcluded as templates. In particular, a page in the main namespace can be transcluded by prefixing its title with a colon; for example, {{:MediaWiki}} transcludes the article "MediaWiki" from the main namespace. Also, it is possible to mark the portions of a page that should be transcluded in several ways, the most basic of which are:[50]

- `<noinclude>...</noinclude>`, which marks content that is not to be transcluded;
- `<includeonly>...</includeonly>`, which marks content that is not rendered unless it is transcluded;
- `<onlyinclude>...</onlyinclude>`, which marks content that is to be the only content transcluded.

A related method, called template substitution (called by adding subst: at the beginning of a template link) inserts the contents of the template into the target page (like a copy and paste operation), instead of loading the template contents dynamically whenever the page is loaded. This can lead to inconsistency when using templates, but may be useful in certain cases, and in most cases requires fewer server resources (the actual amount of savings can vary depending on wiki configuration and the complexity of the template).
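The effect of the three inclusion-control tags can be approximated with a few regular expressions. The Python sketch below is a simplification under stated assumptions: tags are not nested, and the two function names are invented for this example. It computes what another page receives when it transcludes the source, and what a reader sees when viewing the source page directly.

```python
import re

NOINCLUDE = re.compile(r"<noinclude>.*?</noinclude>", re.DOTALL)
INCLUDEONLY = re.compile(r"<includeonly>.*?</includeonly>", re.DOTALL)
ONLYINCLUDE = re.compile(r"<onlyinclude>(.*?)</onlyinclude>", re.DOTALL)
ANY_TAG = re.compile(r"</?(?:noinclude|includeonly|onlyinclude)>")


def transcluded_text(source: str) -> str:
    """Text another page receives when it transcludes this page."""
    only = ONLYINCLUDE.findall(source)
    if only:
        # If onlyinclude sections exist, nothing else is transcluded.
        source = "".join(only)
    source = NOINCLUDE.sub("", source)   # drop content excluded from transclusion
    return ANY_TAG.sub("", source)       # unwrap includeonly content


def direct_view_text(source: str) -> str:
    """Text shown when the page itself is viewed."""
    source = INCLUDEONLY.sub("", source)  # hidden unless transcluded
    return ANY_TAG.sub("", source)        # unwrap noinclude and onlyinclude
```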

Templates have found many different uses. Templates enable users to create complex table layouts that are used consistently across multiple pages, and where only the content of the tables gets inserted using template parameters. Templates are frequently used to identify problems with a Wikipedia article by putting a template in the article. This template then outputs a graphical box stating that the article content is disputed or in need of some other attention, and also categorizes it so that articles of this nature can be located. Templates are also used on user pages to send users standard messages welcoming them to the site,[51] giving them awards for outstanding contributions,[52][53] warning them when their behavior is considered inappropriate,[54] notifying them when they are blocked from editing,[55] and so on.

MediaWiki offers flexibility in creating and defining user groups. For instance, it would be possible to create an arbitrary "ninja" group that can block users and delete pages, and whose edits are hidden by default in the recent changes log. It is also possible to set up a group of "autoconfirmed" users that one becomes a member of after making a certain number of edits and waiting a certain number of days.[56] Some groups that are enabled by default are bureaucrats and sysops. Bureaucrats have the power to change other users' rights. Sysops have power over page protection and deletion and the blocking of users from editing. MediaWiki's available controls on editing rights have been deemed sufficient for publishing and maintaining important documents such as a manual of standard operating procedures in a hospital.[57]
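The group model can be pictured as a mapping from group names to sets of rights, with a user's effective rights being the union over all groups they belong to. The Python sketch below is a loose analogy rather than MediaWiki's configuration format: the table contents, the function names, and the autoconfirmed thresholds (10 edits, 4 days) are all assumptions chosen for illustration, and hiding a group's edits from recent changes is not modeled.

```python
# Hypothetical rights table; "*" applies to everyone, including anonymous users.
GROUP_PERMISSIONS = {
    "*": {"read"},
    "user": {"read", "edit"},
    "sysop": {"block", "delete", "protect"},
    "bureaucrat": {"userrights"},
    "ninja": {"block", "delete"},  # the arbitrary group mentioned in the text
}


def rights_for(groups) -> set:
    """Effective rights are the union of the rights of every group."""
    rights = set(GROUP_PERMISSIONS["*"])
    for group in groups:
        rights |= GROUP_PERMISSIONS.get(group, set())
    return rights


def is_autoconfirmed(edit_count: int, account_age_days: int,
                     min_edits: int = 10, min_days: int = 4) -> bool:
    """Membership is earned automatically once both thresholds are met."""
    return edit_count >= min_edits and account_age_days >= min_days
```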

MediaWiki comes with a basic set of features related to restricting access, but its original and ongoing design is driven by functions that largely relate to content, not content segregation. As a result, with minimal exceptions (related to specific tools and their related "Special" pages), page access control has never been a high priority in core development and developers have stated that users requiring secure user access and authorization controls should not rely on MediaWiki, since it was never designed for these kinds of situations. For instance, it is extremely difficult to create a wiki where only certain users can read and access some pages.[58] Here, wiki engines like Foswiki, MoinMoin and Confluence provide more flexibility by supporting advanced security mechanisms like access control lists.

The MediaWiki codebase contains various hooks using callback functions to add additional PHP code in an extensible way. This allows developers to write extensions without necessarily needing to modify the core or having to submit their code for review. Installing an extension typically consists of adding a line to the configuration file, though in some cases additional changes such as database updates or core patches are required.

Five main extension points were created to allow developers to add features and functionalities to MediaWiki. Hooks are run every time a certain event happens; for instance, the ArticleSaveComplete hook occurs after a save article request has been processed.[59] This can be used, for example, by an extension that notifies selected users whenever a page edit occurs on the wiki from new or anonymous users.[60] New tags can be created to process data with opening and closing tags (<newtag>...</newtag>).[61] Parser functions can be used to create a new command (...).[62] New special pages can be created to perform a specific function. These pages are dynamically generated. For example, a special page might show all pages that have one or more links to an external site or it might create a form providing user submitted feedback.[63] Skins allow users to customize the look and feel of MediaWiki.[64] A minor extension point allows the use of Amazon S3 to host image files.[65]
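The hook mechanism is essentially a registry of callbacks keyed by event name. MediaWiki implements this in PHP; the Python sketch below only mirrors the general pattern, and `register_hook`, `run_hooks`, and the handler's parameters are hypothetical names for this example. It reuses the notification scenario from the text: a handler attached to an `ArticleSaveComplete` event records edits made by anonymous users.

```python
from collections import defaultdict

# Event name -> list of callbacks, in registration order.
_hooks = defaultdict(list)


def register_hook(event: str, handler) -> None:
    """Attach a callback to an event without touching core code."""
    _hooks[event].append(handler)


def run_hooks(event: str, **context) -> None:
    """Called by the core whenever the event happens."""
    for handler in _hooks[event]:
        handler(**context)


notifications = []


def notify_on_anonymous_edit(page: str, user: str, is_anonymous: bool) -> None:
    # Extension code: only edits by anonymous users trigger a notification.
    if is_anonymous:
        notifications.append(f"{page} edited by {user}")


register_hook("ArticleSaveComplete", notify_on_anonymous_edit)
run_hooks("ArticleSaveComplete", page="Tea", user="203.0.113.7", is_anonymous=True)
run_hooks("ArticleSaveComplete", page="Tea", user="Alice", is_anonymous=False)
```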

Among the most popular extensions is a parser function extension, ParserFunctions, which allows different content to be rendered based on the result of conditional statements.[66] These conditional statements can perform functions such as evaluating whether a parameter is empty, comparing strings, evaluating mathematical expressions, and returning one of two values depending on whether a page exists. It was designed as a replacement for a notoriously inefficient template called Qif.[67] Schindler recounts the history of the ParserFunctions extension as follows:[68]

In 2006 some Wikipedians discovered that through an intricate and complicated interplay of templating features and CSS they could create conditional wiki text, i.e. text that was displayed if a template parameter had a specific value. This included repeated calls of templates within templates, which bogged down the performance of the whole system. The developers faced the choice of either disallowing the spreading of an obviously desired feature by detecting such usage and explicitly disallowing it within the software or offering an efficient alternative. The latter was done by Tim Starling, who announced the introduction of parser functions, wiki text that calls functions implemented in the underlying software. At first, only conditional text and the computation of simple mathematical expressions were implemented, but this already increased the possibilities for wiki editors enormously. With time further parser functions were introduced, finally leading to a framework that allowed the simple writing of extension functions to add arbitrary functionalities, like e.g. geo-coding services or widgets. This time the developers were clearly reacting to the demand of the community, being forced either to fight the solution of the issue that the community had (i.e. conditional text), or offer an improved technical implementation to replace the previous practice and achieve an overall better performance.

Another parser functions extension, StringFunctions, was developed to allow evaluation of string length, string position, and so on. Wikimedia communities, having created awkward workarounds to accomplish the same functionality,[69] clamored for it to be enabled on their projects.[70] Much of its functionality was eventually integrated into the ParserFunctions extension,[71] albeit disabled by default and accompanied by a warning from Tim Starling that enabling string functions would allow users "to implement their own parsers in the ugliest, most inefficient programming language known to man: MediaWiki wikitext with ParserFunctions."[72]

Since 2012 an extension, Scribunto, has existed that allows for the creation of "modules"—wiki pages written in the scripting language Lua—which can then be run within templates and standard wiki pages. Scribunto has been installed on Wikipedia and other Wikimedia sites since 2013 and is used heavily on those sites. Scribunto code runs significantly faster than corresponding wikitext code using ParserFunctions.[73]

Another very popular extension is a citation extension that enables footnotes to be added to pages using inline references.[74] This extension has, however, been criticized for being difficult to use and requiring the user to memorize complex syntax. A gadget called RefToolbar attempts to make it easier to create citations using common templates. MediaWiki has some extensions that are well-suited for academia, such as mathematics extensions[75] and an extension that allows molecules to be rendered in 3D.[76]

A generic Widgets extension exists that allows MediaWiki to integrate with virtually anything. Other examples of extensions that could improve a wiki are category suggestion extensions[77] and extensions for inclusion of Flash Videos,[78] YouTube videos,[79] and RSS feeds.[80] Metavid, a site that archives video footage of the U.S. Senate and House floor proceedings, was created using code extending MediaWiki into the domain of collaborative video authoring.[81]

There are many spambots that search the web for MediaWiki installations and add linkspam to them, despite the fact that MediaWiki uses the nofollow attribute to discourage such attempts at search engine optimization.[82] Part of the problem is that third party republishers, such as mirrors, may not independently implement the nofollow tag on their websites, so marketers can still get PageRank benefit by inserting links into pages when those entries appear on third party websites.[83] Anti-spam extensions have been developed to combat the problem by introducing CAPTCHAs,[84] blacklisting certain URLs,[85] and allowing bulk deletion of pages recently added by a particular user.[86]

MediaWiki comes pre-installed with a standard text-based search. Extensions exist to let MediaWiki use more sophisticated third-party search engines, including Elasticsearch (which since 2014 has been in use on Wikipedia), Lucene[87] and Sphinx.[88]

Various MediaWiki extensions have also been created to allow for more complex, faceted search, on both data entered within the wiki and on metadata such as pages' revision history.[89][90] Semantic MediaWiki is one such extension.[91][92]

Various extensions to MediaWiki support rich content generated through specialized syntax. These include mathematical formulas using LaTeX, graphical timelines, mathematical plotting, musical scores and Egyptian hieroglyphs.

The software supports a wide variety of uploaded media files, and allows image galleries and thumbnails to be generated with relative ease. There is also support for Exif metadata. MediaWiki operates the Wikimedia Commons, one of the largest free content media archives.

For WYSIWYG editing, VisualEditor is available in MediaWiki; it simplifies the editing process for editors and has been bundled since MediaWiki 1.35.[93] Other extensions exist for handling WYSIWYG editing to different degrees.[94]

MediaWiki can use the MySQL/MariaDB, PostgreSQL or SQLite relational database management systems. Support for Oracle Database and Microsoft SQL Server has been dropped since MediaWiki 1.34.[95] A MediaWiki database contains several dozen tables, including a page table that contains page titles, page ids, and other metadata;[96] and a revision table to which a new row is added every time an edit is made, containing the page id, a brief textual summary of the change performed, the user name of the article editor (or the IP address in the case of an unregistered user) and a timestamp.[97][98]
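The relationship between the two tables can be illustrated with a minimal sketch using Python's built-in sqlite3 module. The table layout and column names below are simplified assumptions chosen for illustration; they are not MediaWiki's actual schema, and the `save_edit` helper is hypothetical.

```python
import sqlite3

# In-memory SQLite database. Table and column names are illustrative
# assumptions, not MediaWiki's real schema.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE page (
        page_id    INTEGER PRIMARY KEY,
        page_title TEXT NOT NULL UNIQUE
    );
    CREATE TABLE revision (
        rev_id        INTEGER PRIMARY KEY,
        rev_page      INTEGER NOT NULL REFERENCES page(page_id),
        rev_comment   TEXT,
        rev_user_text TEXT NOT NULL,
        rev_timestamp TEXT NOT NULL
    );
""")


def save_edit(conn, title, summary, editor, timestamp):
    """Record one edit: create the page row if needed, then append a revision row."""
    conn.execute("INSERT OR IGNORE INTO page (page_title) VALUES (?)", (title,))
    (page_id,) = conn.execute(
        "SELECT page_id FROM page WHERE page_title = ?", (title,)
    ).fetchone()
    conn.execute(
        "INSERT INTO revision (rev_page, rev_comment, rev_user_text, rev_timestamp)"
        " VALUES (?, ?, ?, ?)",
        (page_id, summary, editor, timestamp),
    )
    return page_id


# A registered user and an unregistered user (identified by IP address)
# each edit the same page, producing one page row and two revision rows.
page_id = save_edit(conn, "Main_Page", "Created page", "Alice", "2024-01-01T00:00:00Z")
save_edit(conn, "Main_Page", "Fixed typo", "192.0.2.1", "2024-01-02T00:00:00Z")

revision_count = conn.execute(
    "SELECT COUNT(*) FROM revision WHERE rev_page = ?", (page_id,)
).fetchone()[0]
page_count = conn.execute("SELECT COUNT(*) FROM page").fetchone()[0]
```

The page row is written once, while the revision table only ever grows, which is the pattern the paragraph above describes.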

In a 4½ year period prior to 2008, the MediaWiki database had 170 schema versions.[99] Possibly the largest schema change was done in 2005 with MediaWiki 1.5, when the storage of metadata was separated from that of content, to improve performance and flexibility. When this upgrade was applied to Wikipedia, the site was locked for editing, and the schema was converted to the new version in about 22 hours. Some software enhancement proposals, such as a proposal to allow sections of articles to be watched via watchlist, have been rejected because the necessary schema changes would have required excessive Wikipedia downtime.[100]

Because it is used to run one of the highest-traffic sites on the Web, Wikipedia, MediaWiki's performance and scalability have been highly optimized.[101] MediaWiki supports Squid, load-balanced database replication, client-side caching, memcached or table-based caching for frequently accessed processing of query results, a simple static file cache, feature-reduced operation, revision compression, and a job queue for database operations. MediaWiki developers have attempted to optimize the software by avoiding expensive algorithms, database queries, etc., caching every result that is expensive and has temporal locality of reference, and focusing on the hot spots in the code through profiling.[102]

MediaWiki code is designed to allow for data to be written to a read-write database and read from read-only databases, although the read-write database can be used for some read operations if the read-only databases are not yet up to date. Metadata, such as article revision history, article relations (links, categories etc.), user accounts and settings can be stored in core databases and cached; the actual revision text, being more rarely used, can be stored as append-only blobs in external storage. The software is suitable for the operation of large-scale wiki farms such as Wikimedia, which had about 800 wikis as of August 2011. However, MediaWiki comes with no built-in GUI to manage such installations.
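The read/write split described above can be sketched as a small routing function. This is only an illustration under stated assumptions: the `Database` class, the `applied_position` field, and `choose_read_db` are hypothetical names, and MediaWiki's own load balancer is considerably more involved.

```python
from dataclasses import dataclass


@dataclass
class Database:
    name: str
    applied_position: int  # hypothetical counter: last write this server has applied


def choose_read_db(primary, replicas, required_position):
    """Pick a read-only replica that has caught up to required_position.

    Falls back to the read-write primary when every replica is lagging.
    """
    for replica in replicas:
        if replica.applied_position >= required_position:
            return replica
    return primary


primary = Database("primary", applied_position=10)
replicas = [Database("replica1", 7), Database("replica2", 9)]

# A reader that needs to see write 9 can use replica2.
fresh_enough = choose_read_db(primary, replicas, required_position=9)
# A reader that needs to see write 10 must go to the primary.
fallback = choose_read_db(primary, replicas, required_position=10)
```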

Empirical evidence shows most revisions in MediaWiki databases tend to differ only slightly from previous revisions. Therefore, subsequent revisions of an article can be concatenated and then compressed, achieving very high data compression ratios of up to 100×.[102]
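The effect can be demonstrated with a short sketch using Python's zlib module. The revisions here are synthetic (a fixed pseudo-random base text with a small change appended to each), and the separator byte and sizes are assumptions for illustration; real ratios depend on the content and on the compression scheme actually used.

```python
import random
import zlib

rng = random.Random(0)  # fixed seed so the example is reproducible

# Build a base text of pseudo-random "words" so that a single revision
# does not compress especially well on its own.
words = [
    "".join(rng.choice("abcdefghijklmnopqrstuvwxyz") for _ in range(rng.randint(3, 9)))
    for _ in range(300)
]
base = " ".join(words)

# Twenty synthetic revisions, each differing only slightly from the others.
revisions = [base + f" Edit number {i}." for i in range(20)]

# Option 1: compress every revision separately.
separate_size = sum(len(zlib.compress(r.encode("utf-8"))) for r in revisions)

# Option 2: concatenate the revisions (NUL-separated) and compress once,
# letting the compressor reuse text shared between neighbouring revisions.
combined_blob = zlib.compress("\x00".join(revisions).encode("utf-8"))
combined_size = len(combined_blob)

print(f"separately: {separate_size} bytes, concatenated: {combined_size} bytes")
```

Because each revision repeats most of the previous one, the concatenated blob is much smaller than the sum of the individually compressed revisions, and the original revisions can still be recovered by decompressing and splitting on the separator.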

For more information on the architecture, such as how it stores wikitext and assembles a page, see External links.

The parser serves as the de facto standard for the MediaWiki syntax, as no formal syntax has been defined. Due to this lack of a formal definition, it has been difficult to create WYSIWYG editors for MediaWiki, although several WYSIWYG extensions do exist, including the popular VisualEditor.

MediaWiki is not designed to be a suitable replacement for dedicated online forum or blogging software,[103] although extensions do exist to allow for both of these.[104][105]

It is common for new MediaWiki users to make certain mistakes, such as forgetting to sign posts with four tildes (~~~~),[106] or manually entering a plaintext signature,[107] due to unfamiliarity with the idiosyncratic particulars involved in communication on MediaWiki discussion pages. On the other hand, the format of these discussion pages has been cited as a strength by one educator, who stated that it provides more fine-grained capabilities for discussion than traditional threaded discussion forums. For example, instead of 'replying' to an entire message, the participant in a discussion can create a hyperlink to a new wiki page on any word from the original page. Discussions are easier to follow since the content is available via hyperlinked wiki pages, rather than a series of reply messages on a traditional threaded discussion forum. However, except in a few cases, students were not using this capability, possibly because of their familiarity with the traditional linear discussion style and a lack of guidance on how to make the content more 'link-rich'.[108]

MediaWiki by default has little support for the creation of dynamically assembled documents, or pages that aggregate data from other pages. Some research has been done on enabling such features directly within MediaWiki.[109] The Semantic MediaWiki extension provides these features. It is not in use on Wikipedia, but it is used in more than 1,600 other MediaWiki installations.[110] The Wikibase Repository and the Wikibase Repository client are, however, implemented in Wikidata and Wikipedia respectively; to some extent they provide semantic web features and link centrally stored data to infoboxes in various Wikipedia articles.

Upgrading MediaWiki is usually fully automated, requiring no changes to the site content or template programming. Historically troubles have been encountered when upgrading from significantly older versions.[111]

MediaWiki developers have enacted security standards, both for core code and extensions.[112] SQL queries and HTML output are usually done through wrapper functions that handle validation, escaping, filtering for prevention of cross-site scripting and SQL injection.[113] Many security issues have had to be patched after a MediaWiki version release,[114] and accordingly MediaWiki.org states, "The most important security step you can take is to keep your software up to date" by subscribing to the announcement mailing list and installing security updates that are announced.[115]
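The general idea of routing queries and output through wrapper functions can be sketched with Python's standard library. The `find_pages` and `render_comment` helpers and the one-column `page` table are hypothetical, written only to illustrate parameter binding and HTML escaping; they are not MediaWiki's actual wrapper functions, which are written in PHP.

```python
import html
import sqlite3


def find_pages(conn, title):
    """Query wrapper: user input is bound as a parameter, never spliced into SQL."""
    return conn.execute(
        "SELECT page_title FROM page WHERE page_title = ?", (title,)
    ).fetchall()


def render_comment(comment):
    """Output wrapper: escape user-supplied text before placing it in HTML."""
    return "<p>" + html.escape(comment) + "</p>"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE page (page_title TEXT)")
conn.execute("INSERT INTO page (page_title) VALUES ('Main_Page')")

# A classic injection attempt is treated as a literal (non-matching) title.
injection_result = find_pages(conn, "x' OR '1'='1")
normal_result = find_pages(conn, "Main_Page")

# Markup in user input is rendered inert instead of being executed.
safe_html = render_comment("<script>alert(1)</script>")
```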

Support for MediaWiki users consists of:

MediaWiki is free and open-source and is distributed under the terms of the GNU General Public License version 2 or any later version. Its documentation, located at its official website at www.mediawiki.org, is released under the Creative Commons BY-SA 4.0 license. A set of help pages intended to be freely copied into fresh wiki installations or distributed with MediaWiki software is instead released into the public domain, to eliminate legal issues for wikis with other licenses.[119][120] MediaWiki's development has generally favored the use of open-source media formats.[121]

MediaWiki has an active volunteer community for development and maintenance. MediaWiki developers are spread around the world, though with a majority in the United States and Europe. Face-to-face meetings and programming sessions for MediaWiki developers have been held once or several times a year since 2004.[122]

Anyone can submit patches to the project's Git/Gerrit repository.[123] There are also paid programmers who primarily develop projects for the Wikimedia Foundation. MediaWiki developers participate in the Google Summer of Code by facilitating the assignment of mentors to students wishing to work on MediaWiki core and extension projects.[124] During the year prior to November 2012, there were about two hundred developers who had committed changes to the MediaWiki core or extensions.[125] Major MediaWiki releases are generated approximately every six months by taking snapshots of the development branch, which is kept continuously in a runnable state;[126] minor releases, or point releases, are issued as needed to correct bugs (especially security problems). MediaWiki is developed on a continuous integration development model, in which software changes are pushed live to Wikimedia sites on a regular basis.[126] MediaWiki also has a public bug tracker, phabricator.wikimedia.org, which runs Phabricator. The site is also used for feature and enhancement requests.

When Wikipedia was launched in January 2001, it ran on an existing wiki software system, UseModWiki. UseModWiki is written in the Perl programming language, and stores all wiki pages in text (.txt) files. This software soon proved to be limiting, in both functionality and performance. In mid-2001, Magnus Manske—a developer and student at the University of Cologne, as well as a Wikipedia editor—began working on new software that would replace UseModWiki, specifically designed for use by Wikipedia. This software was written in the PHP scripting language, and stored all of its information in a MySQL database. The new software was largely developed by August 24, 2001, and a test wiki for it was established shortly thereafter.

The first full implementation of this software was the new Meta Wikipedia on November 9, 2001. There was a desire to have it implemented immediately on the English-language Wikipedia.[127] However, Manske was apprehensive about any potential bugs harming the nascent website during the period of the final exams he had to complete immediately prior to Christmas;[128] this led to the launch on the English-language Wikipedia being delayed until January 25, 2002. The software was then gradually deployed on all the Wikipedia language sites of that time. This software was referred to as "the PHP script" and as "phase II", with the name "phase I" retroactively given to the use of UseModWiki.

Increasing usage soon caused load problems to arise again, and soon afterward another rewrite of the software began, this time by Lee Daniel Crocker; the result became known as "phase III". This new software was also written in PHP, with a MySQL backend, and kept the basic interface of the phase II software, but added greater scalability. The "phase III" software went live on Wikipedia in July 2002.

The Wikimedia Foundation was announced on June 20, 2003. In July, Wikipedia contributor Daniel Mayer suggested the name "MediaWiki" for the software, as a play on "Wikimedia".[129] The MediaWiki name was gradually phased in, beginning in August 2003. The name has frequently caused confusion due to its (intentional) similarity to the "Wikimedia" name (which itself is similar to "Wikipedia").[130] The first version of MediaWiki, 1.1, was released in December 2003.

The old product logo was created by Erik Möller, using a flower photograph taken by Florence Nibart-Devouard, and was originally submitted to the logo contest for a new Wikipedia logo, held from July 20 to August 27, 2003.[131][132] The logo came in third place, and was chosen to represent MediaWiki rather than Wikipedia, with the second place logo being used for the Wikimedia Foundation.[133] The double square brackets ([[ ]]) symbolize the syntax MediaWiki uses for creating hyperlinks to other wiki pages; while the sunflower represents the diversity of content on Wikipedia, its constant growth, and the wilderness.[134]

Later, Brooke Vibber, the chief technical officer of the Wikimedia Foundation,[135] took up the role of release manager.[136][101]

Major milestones in MediaWiki's development have included: the categorization system (2004); parser functions (2006); Flagged Revisions (2008);[68] the "ResourceLoader", a delivery system for CSS and JavaScript (2011);[137] and the VisualEditor, a "what you see is what you get" (WYSIWYG) editing platform (2013).[138]

A contest to design a new logo was initiated on June 22, 2020, as the old logo was a bitmap image and had "high details", leading to problems when rendering at high and low resolutions, respectively. After two rounds of voting, the new and current MediaWiki logo designed by Serhio Magpie was selected on October 24, 2020, and officially adopted on April 1, 2021.[139]

MediaWiki's most famous use has been in Wikipedia and, to a lesser degree, the Wikimedia Foundation's other projects. Fandom, a wiki hosting service formerly known as Wikia, runs on MediaWiki. Other public wikis that run on MediaWiki include wikiHow and SNPedia. WikiLeaks began as a MediaWiki-based site, but is no longer a wiki.

A number of alternative wiki encyclopedias to Wikipedia run on MediaWiki, including Citizendium, Metapedia, Scholarpedia and Conservapedia. MediaWiki is also used internally by a large number of companies, including Novell and Intel.[140][141]

Notable usages of MediaWiki within governments include Intellipedia, used by the United States Intelligence Community, Diplopedia, used by the United States Department of State, and milWiki, a part of milSuite used by the United States Department of Defense. United Nations agencies such as the United Nations Development Programme and INSTRAW chose to implement their wikis using MediaWiki, because "this software runs Wikipedia and is therefore guaranteed to be thoroughly tested, will continue to be developed well into the future, and future technicians on these wikis will be more likely to have exposure to MediaWiki than any other wiki software."[142]

The Free Software Foundation uses MediaWiki to implement the LibrePlanet site.[143]

Users of online collaboration software are familiar with MediaWiki's functions and layout due to its noted use on Wikipedia. A 2006 overview of social software in academia observed that "Compared to other wikis, MediaWiki is also fairly aesthetically pleasing, though simple, and has an easily customized side menu and stylesheet."[144] However, in one assessment in 2006, Confluence was deemed to be a superior product due to its very usable API and ability to better support multiple wikis.[76]

A 2009 study at the University of Hong Kong compared TWiki to MediaWiki. The authors noted that TWiki has been considered as a collaborative tool for the development of educational papers and technical projects, whereas MediaWiki's most noted use is on Wikipedia. Although both platforms allow discussion and tracking of progress, TWiki has a "Report" part that MediaWiki lacks. Students perceived MediaWiki as being easier to use and more enjoyable than TWiki. When asked whether they recommended using MediaWiki for a knowledge management course group project, 15 out of 16 respondents expressed their preference for MediaWiki, giving answers of great certainty such as "of course" and "for sure".[145] TWiki and MediaWiki both have a flexible plug-in architecture.[146]

A 2009 study that compared students' experience with MediaWiki to that with Google Docs found that students gave the latter a much higher rating on user-friendly layout.[147]

A 2021 study conducted by the Brazilian Nuclear Engineering Institute compared a MediaWiki-based knowledge management system against two others that were based on DSpace and Open Journal Systems, respectively.[148] It highlighted ease of use as an advantage of the MediaWiki-based system, noting that because the Wikimedia Foundation had been developing MediaWiki for a site aimed at the general public (Wikipedia), "its user interface was designed to be more user-friendly from start, and has received large user feedback over a long time", in contrast to DSpace's and OJS's focus on niche audiences.[148]


| Type of site | Web search engine |
|---|---|
| Available in | 149 languages |
| Revenue | Google Ads |
| URL | google |
| IPv6 support | Yes[1] |
| Commercial | Yes |
| Registration | Optional |
| Current status | Online |

Google Search (also known simply as Google or Google.com) is a search engine operated by Google. It allows users to search for information on the Web by entering keywords or phrases. Google Search uses algorithms to analyze and rank websites based on their relevance to the search query. It is the most popular search engine worldwide.

Google Search is the most-visited website in the world. As of 2020, Google Search had a 92% share of the global search engine market.[3] Approximately 26.75% of Google's monthly global traffic comes from the United States, 4.44% from India, 4.4% from Brazil, 3.92% from the United Kingdom and 3.84% from Japan, according to data provided by Similarweb.[4]

The order of search results returned by Google is based, in part, on a priority rank system called "PageRank". Google Search also provides many different options for customized searches, using symbols to include, exclude, specify or require certain search behavior, and offers specialized interactive experiences, such as flight status and package tracking, weather forecasts, currency, unit, and time conversions, word definitions, and more.

The main purpose of Google Search is to search for text in publicly accessible documents offered by web servers, as opposed to other data, such as images or data contained in databases. It was originally developed in 1996 by Larry Page, Sergey Brin, and Scott Hassan.[5][6][7] The search engine was also set up in the garage of Susan Wojcicki's Menlo Park home.[8] In 2011, Google introduced "Google Voice Search" to search for spoken, rather than typed, words.[9] In 2012, Google introduced a semantic search feature named Knowledge Graph.

Analysis of the frequency of search terms may indicate economic, social and health trends.[10] Data about the frequency of use of search terms on Google can be openly queried via Google Trends; it has been shown to correlate with flu outbreaks and unemployment levels, and to provide the information faster than traditional reporting methods and surveys. In mid-2016, Google's search engine began to rely on deep neural networks.[11]

In August 2024, a US federal judge in Washington, D.C., ruled that Google's search engine held an illegal monopoly over Internet search.[12][13] The court found that Google maintained its market dominance by paying large amounts to phone-makers and browser-developers to make Google its default search engine.[13]

Google indexes hundreds of terabytes of information from web pages.[14] For websites that are currently down or otherwise not available, Google provides links to cached versions of the site, formed by the search engine's latest indexing of that page.[15] Additionally, Google indexes some file types, being able to show users PDFs, Word documents, Excel spreadsheets, PowerPoint presentations, certain Flash multimedia content, and plain text files.[16] Users can also activate "SafeSearch", a filtering technology aimed at preventing explicit and pornographic content from appearing in search results.[17]

Despite Google search's immense index, sources generally assume that Google is only indexing less than 5% of the total Internet, with the rest belonging to the deep web, inaccessible through its search tools.[14][18][19]

In 2012, Google changed its search indexing tools to demote sites that had been accused of piracy.[20] In October 2016, Gary Illyes, a webmaster trends analyst with Google, announced that the search engine would be making a separate, primary web index dedicated for mobile devices, with a secondary, less up-to-date index for desktop use. The change was a response to the continued growth in mobile usage, and a push for web developers to adopt a mobile-friendly version of their websites.[21][22] In December 2017, Google began rolling out the change, having already done so for multiple websites.[23]

In August 2009, Google invited web developers to test a new search architecture, codenamed "Caffeine", and give their feedback. The new architecture provided no visual differences in the user interface, but added significant speed improvements and a new "under-the-hood" indexing infrastructure. The move was interpreted in some quarters as a response to Microsoft's recent release of an upgraded version of its own search service, renamed Bing, as well as the launch of Wolfram Alpha, a new search engine based on "computational knowledge".[24][25] Google announced completion of "Caffeine" on June 8, 2010, claiming 50% fresher results due to continuous updating of its index.[26]

With "Caffeine", Google moved its back-end indexing system away from MapReduce and onto Bigtable, the company's distributed database platform.[27][28]

In August 2018, Danny Sullivan from Google announced a broad core algorithm update. According to analysis at the time by the industry publications Search Engine Watch and Search Engine Land, the update demoted medical and health-related websites that were not user-friendly and did not provide a good user experience. For this reason, industry experts named it "Medic".[29]

Google holds YMYL (Your Money or Your Life) pages to very high standards, because misinformation on such pages can affect users financially, physically, or emotionally. The update therefore particularly targeted YMYL pages with low-quality content and misinformation. This resulted in the algorithm targeting health and medical-related websites more than others. However, many websites from other industries were also negatively affected.[30]

By 2012, Google Search handled more than 3.5 billion searches per day.[31] In 2013, the European Commission found that Google Search favored Google's own products, instead of the best result for consumers' needs.[32] In February 2015, Google announced a major change to its mobile search algorithm that would favor mobile-friendly websites over others. Nearly 60% of Google searches came from mobile phones, and Google said it wanted users to have access to premium-quality websites. Websites lacking a mobile-friendly interface would be ranked lower, and the update was expected to cause a shake-up of rankings. Businesses that failed to update their websites accordingly could see a dip in their regular website traffic.[33]

Google's rise was largely due to a patented algorithm called PageRank which helps rank web pages that match a given search string.[34] When Google was a Stanford research project, it was nicknamed BackRub because the technology checks backlinks to determine a site's importance. Other keyword-based methods to rank search results, used by many search engines that were once more popular than Google, would check how often the search terms occurred in a page, or how strongly associated the search terms were within each resulting page. The PageRank algorithm instead analyzes human-generated links assuming that web pages linked from many important pages are also important. The algorithm computes a recursive score for pages, based on the weighted sum of other pages linking to them. PageRank is thought to correlate well with human concepts of importance. In addition to PageRank, Google, over the years, has added many other secret criteria for determining the ranking of resulting pages. This is reported to comprise over 250 different indicators,[35][36] the specifics of which are kept secret to avoid difficulties created by scammers and help Google maintain an edge over its competitors globally.
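The recursive scoring idea can be illustrated with a minimal power-iteration sketch. This follows the commonly published formulation of PageRank; the damping factor of 0.85, the iteration count, the treatment of pages without outgoing links, and the four-page example graph are all assumptions for illustration, and nothing here reflects Google's production ranking system.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Compute PageRank scores by power iteration.

    links maps each page to the list of pages it links to.
    damping and iterations are conventional illustrative defaults.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}

    for _ in range(iterations):
        # Every page starts each round with the same small baseline score.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its score on, split evenly among its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                share = damping * rank[page] / n
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: C is linked from A, B and D; nothing links to D.
links = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(links)

for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(page, round(score, 3))
```

In this example the page with the most incoming links from well-ranked pages (C) ends up with the highest score, and the page nobody links to (D) ends up with the lowest, which matches the intuition that importance flows along links.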

PageRank was influenced by a similar page-ranking and site-scoring algorithm earlier used for RankDex, developed by Robin Li in 1996. Larry Page's patent for PageRank filed in 1998 includes a citation to Li's earlier patent. Li later went on to create the Chinese search engine Baidu in 2000.[37][38]

In a potential hint at the future direction of Google's search algorithm, Google's then chief executive Eric Schmidt said in a 2007 interview with the Financial Times: "The goal is to enable Google users to be able to ask the question such as 'What shall I do tomorrow?' and 'What job shall I take?'".[39] Schmidt reaffirmed this during a 2010 interview with The Wall Street Journal: "I actually think most people don't want Google to answer their questions, they want Google to tell them what they should be doing next."[40]

Because Google is the most popular search engine, many webmasters attempt to influence their website's Google rankings. An industry of consultants has arisen to help websites increase their rankings on Google and other search engines. This field, called search engine optimization, attempts to discern patterns in search engine listings, and then develop a methodology for improving rankings to draw more searchers to their clients' sites. Search engine optimization encompasses both "on page" factors (like body copy, title elements, H1 heading elements and image alt attribute values) and "off page" factors (like anchor text and PageRank). The general idea is to affect Google's relevance algorithm by incorporating the keywords being targeted in various places "on page", in particular the title element and the body copy (note: the higher up in the page, presumably the better its keyword prominence and thus the ranking). Too many occurrences of the keyword, however, cause the page to look suspect to Google's spam checking algorithms. Google has published guidelines for website owners who would like to raise their rankings when using legitimate optimization consultants.[41] It has been hypothesized, and is allegedly the opinion of the owner of one business about which there have been numerous complaints, that negative publicity, such as numerous consumer complaints, may serve as well as favorable comments to elevate page rank on Google Search.[42] The particular problem addressed in The New York Times article, which involved DecorMyEyes, was addressed shortly thereafter by an undisclosed fix in the Google algorithm. According to Google, it was not the frequently published consumer complaints about DecorMyEyes that resulted in the high ranking, but mentions on news websites of events that affected the firm, such as legal actions against it. Google Search Console helps to check for websites that use duplicate or copyrighted content.[43]
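The "on page" factors named above can be inspected with a short sketch based on Python's standard html.parser module. The `OnPageExtractor` class, the `keyword_density` helper and the sample page are hypothetical illustrations; how search engines actually weigh these elements, and what keyword frequency they treat as suspect, is not public.

```python
from html.parser import HTMLParser


class OnPageExtractor(HTMLParser):
    """Collect the title text, H1 headings and image alt attributes of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.h1 = []
        self.alts = []
        self._current = None  # tag whose text is currently being collected

    def handle_starttag(self, tag, attrs):
        if tag in ("title", "h1"):
            self._current = tag
            if tag == "h1":
                self.h1.append("")
        elif tag == "img":
            alt = dict(attrs).get("alt")
            if alt:
                self.alts.append(alt)

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1[-1] += data


def keyword_density(text, keyword):
    """Share of whitespace-separated words in text that equal keyword (case-insensitive)."""
    words = text.lower().split()
    return words.count(keyword.lower()) / len(words) if words else 0.0


# Hypothetical page used only for illustration.
html_doc = (
    "<html><head><title>Red Shoes Shop</title></head><body>"
    "<h1>Buy red shoes</h1>"
    '<img src="a.png" alt="Red running shoes">'
    "<p>Our shoes are red.</p></body></html>"
)

extractor = OnPageExtractor()
extractor.feed(html_doc)
density = keyword_density("red shoes and blue shoes", "shoes")
```

Here `extractor.title`, `extractor.h1` and `extractor.alts` hold the elements where a targeted keyword would typically be placed, and `density` gives a crude measure of how often a keyword occurs in a piece of body copy.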

In 2013, Google significantly upgraded its search algorithm with "Hummingbird". Its name was derived from the speed and accuracy of the hummingbird.[44] The change was announced on September 26, 2013, having already been in use for a month.[45] "Hummingbird" places greater emphasis on natural language queries, considering context and meaning over individual keywords.[44] It also looks deeper at content on individual pages of a website, with improved ability to lead users directly to the most appropriate page rather than just a website's homepage.[46] The upgrade marked the most significant change to Google search in years, with more "human" search interactions[47] and a much heavier focus on conversation and meaning.[44] Thus, web developers and writers were encouraged to optimize their sites with natural writing rather than forced keywords, and make effective use of technical web development for on-site navigation.[48]

In 2023, drawing on internal Google documents disclosed as part of the United States v. Google LLC (2020) antitrust case, technology reporters claimed that Google Search was "bloated and overmonetized"[49] and that the "semantic matching" of search queries put advertising profits before quality.[50] Wired withdrew Megan Gray's piece after Google complained about alleged inaccuracies, while the author reiterated that «As stated in court, "A goal of Project Mercury was to increase commercial queries"».[51]

In March 2024, Google announced a significant update to its core search algorithm and spam targeting, which was expected to wipe out 40 percent of all spam results.[52] On March 20, it was confirmed that the rollout of the spam update was complete.[53]

On September 10, 2024, the Court of Justice of the European Union found that Google held an illegal monopoly in the way the company showed favoritism to its shopping search, and ruled that it could not avoid paying a €2.4 billion fine.[54] The court referred to Google's treatment of rival shopping searches as "discriminatory" and in violation of the Digital Markets Act.[54]

At the top of the search page, the approximate result count and the response time, to two decimal places, are shown. For each search result, the page title and URL, a date, and a preview text snippet appear. Along with web search results, sections with images, news, and videos may appear.[55] The length of the previewed text snippet was experimented with in 2015 and 2017.[56][57]

"Universal search" was launched by Google on May 16, 2007, as an idea that merged the results from different kinds of search types into one. Prior to Universal search, a standard Google search would consist of links only to websites. Universal search, however, incorporates a wide variety of sources, including websites, news, pictures, maps, blogs, videos, and more, all shown on the same search results page.[58][59] Marissa Mayer, then-vice president of search products and user experience, described the goal of Universal search as "we're attempting to break down the walls that traditionally separated our various search properties and integrate the vast amounts of information available into one simple set of search results.[60]

In June 2017, Google expanded its search results to cover available job listings. The data is aggregated from various major job boards and collected by analyzing company homepages. Initially only available in English, the feature aims to simplify finding jobs suitable for each user.[61][62]

In May 2009, Google announced that they would be parsing website microformats to populate search result pages with "Rich snippets". Such snippets include additional details about results, such as displaying reviews for restaurants and social media accounts for individuals.[63]

In May 2016, Google expanded on the "Rich snippets" format to offer "Rich cards", which, similarly to snippets, display more information about results, but show it at the top of the mobile website in a swipeable carousel-like format.[64] Originally limited to movie and recipe websites in the United States only, the feature expanded to all countries globally in 2017.[65]

The Knowledge Graph is a knowledge base used by Google to enhance its search engine's results with information gathered from a variety of sources.[66] This information is presented to users in a box to the right of search results.[67] Knowledge Graph boxes were added to Google's search engine in May 2012,[66] starting in the United States, with international expansion by the end of the year.[68] The information covered by the Knowledge Graph grew significantly after launch, tripling its original size within seven months,[69] and being able to answer "roughly one-third" of the 100 billion monthly searches Google processed in May 2016.[70] The information is often used as a spoken answer in Google Assistant[71] and Google Home searches.[72] The Knowledge Graph has been criticized for providing answers without source attribution.[70]

A Google Knowledge Panel[73] is a feature integrated into Google search engine result pages, designed to present a structured overview of entities such as individuals, organizations, locations, or objects directly within the search interface. This feature leverages data from Google's Knowledge Graph,[74] a database that organizes and interconnects information about entities, enhancing the retrieval and presentation of relevant content to users.

The content within a Knowledge Panel[75] is derived from various sources, including Wikipedia and other structured databases, ensuring that the information displayed is both accurate and contextually relevant. For instance, querying a well-known public figure may trigger a Knowledge Panel displaying essential details such as biographical information, birthdate, and links to social media profiles or official websites.

The primary objective of the Google Knowledge Panel is to provide users with immediate, factual answers, reducing the need for extensive navigation across multiple web pages.

In May 2017, Google enabled a new "Personal" tab in Google Search, letting users search for content in their Google accounts' various services, including email messages from Gmail and photos from Google Photos.[76][77]

Google Discover, previously known as Google Feed, is a personalized stream of articles, videos, and other news-related content. The feed contains a "mix of cards" which show topics of interest based on users' interactions with Google, or topics they choose to follow directly.[78] Cards include, "links to news stories, YouTube videos, sports scores, recipes, and other content based on what [Google] determined you're most likely to be interested in at that particular moment."[78] Users can also tell Google they're not interested in certain topics to avoid seeing future updates.

Google Discover launched in December 2016[79] and received a major update in July 2017.[80] Another major update was released in September 2018, which renamed the app from Google Feed to Google Discover, updated the design, and added more features.[81]

Discover can be found on a tab in the Google app and by swiping left on the home screen of certain Android devices. As of 2019, Google does not allow political campaigns worldwide to target their advertisements at people to make them vote.[82]

At the 2023 Google I/O event in May, Google unveiled Search Generative Experience (SGE), an experimental feature in Google Search available through Google Labs which produces AI-generated summaries in response to search prompts.[83] This was part of Google's wider efforts to counter the unprecedented rise of generative AI technology, ushered in by OpenAI's launch of ChatGPT, which sent Google executives into a panic due to its potential threat to Google Search.[84] Google added the ability to generate images in October.[85] At I/O in 2024, the feature was upgraded and renamed AI Overviews.[86]

AI Overviews was rolled out to users in the United States in May 2024.[86] The feature faced public criticism in the first weeks of its rollout after errors from the tool went viral online. These included results suggesting users add glue to pizza or eat rocks,[87] or incorrectly claiming Barack Obama is Muslim.[88] Google described these viral errors as "isolated examples", maintaining that most AI Overviews provide accurate information.[87][89] Two weeks after the rollout of AI Overviews, Google made technical changes and scaled back the feature, pausing its use for some health-related queries and limiting its reliance on social media posts.[90] Scientific American has criticized the system on environmental grounds, as such a search uses 30 times more energy than a conventional one.[91] It has also been criticized for condensing information from various sources, making it less likely for people to view full articles and websites. When it was announced in May 2024, Danielle Coffey, CEO of the News/Media Alliance, was quoted as saying "This will be catastrophic to our traffic, as marketed by Google to further satisfy user queries, leaving even less incentive to click through so that we can monetize our content."[92]

In August 2024, AI Overviews were rolled out in the UK, India, Japan, Indonesia, Mexico and Brazil, with local language support.[93] On October 28, 2024, AI Overviews was rolled out to 100 more countries, including Australia and New Zealand.[94]

In March 2025, Google introduced an experimental "AI Mode" within its Search platform, enabling users to input complex, multi-part queries and receive comprehensive, AI-generated responses. This feature leverages Google's advanced Gemini 2.0 model, which enhances the system's reasoning capabilities and supports multimodal inputs, including text, images, and voice.

Initially, AI Mode is available to Google One AI Premium subscribers in the United States, who can access it through the Search Labs platform. This phased rollout allows Google to gather user feedback and refine the feature before a broader release.

The introduction of AI Mode reflects Google's ongoing efforts to integrate advanced AI technologies into its services, aiming to provide users with more intuitive and efficient search experiences.[95][96]

In late June 2011, Google introduced a new look to the Google homepage in order to boost the use of the Google+ social tools.[97]

One of the major changes was replacing the classic navigation bar with a black one. Google's digital creative director Chris Wiggins explains: "We're working on a project to bring you a new and improved Google experience, and over the next few months, you'll continue to see more updates to our look and feel."[98] The new navigation bar has been negatively received by a vocal minority.[99]

In November 2013, Google started testing yellow labels for advertisements displayed in search results, to improve user experience. The new labels, highlighted in yellow and aligned to the left of each sponsored link, help users differentiate between organic and sponsored results.[100]

On December 15, 2016, Google rolled out a new desktop search interface that mimics their modular mobile user interface. The mobile design consists of a tabular design that highlights search features in boxes and works by imitating the desktop Knowledge Graph real estate, which appears in the right-hand rail of the search engine result page. These featured elements frequently include Twitter carousels, People Also Search For, and Top Stories (vertical and horizontal design) modules. The Local Pack and Answer Box were two of the original features of the Google SERP that were primarily showcased in this manner, but this new layout creates a previously unseen level of design consistency for Google results.[101]

Google offers a "Google Search" mobile app for Android and iOS devices.[102] The mobile apps exclusively feature Google Discover and a "Collections" feature, in which the user can save for later perusal any type of search result like images, bookmarks or map locations into groups.[103] Android devices were introduced to a preview of the feed, perceived as related to Google Now, in December 2016,[104] while it was made official on both Android and iOS in July 2017.[105][106]

In April 2016, Google updated its Search app on Android to feature "Trends"; search queries gaining popularity appeared in the autocomplete box along with normal query autocompletion.[107] The update received significant backlash, due to encouraging search queries unrelated to users' interests or intentions, prompting the company to issue an update with an opt-out option.[108] In September 2017, the Google Search app on iOS was updated to feature the same functionality.[109]

In December 2017, Google released "Google Go", an app designed to enable use of Google Search on physically smaller and lower-spec devices in multiple languages. A Google blog post about designing "India-first" products and features explains that it is "tailor-made for the millions of people in [India and Indonesia] coming online for the first time".[110]

Google Search consists of a series of localized websites. The largest of those, the google.com site, is the most-visited website in the world.[111] Some of its features include a definition link for most searches including dictionary words, the number of results returned for a search, links to other searches (e.g. for words that Google believes to be misspelled, it provides a link to the search results using its proposed spelling), the ability to filter results to a date range,[112] and many more.

Google search accepts queries as normal text, as well as individual keywords.[113] It automatically corrects apparent misspellings by default (while offering to use the original spelling as a selectable alternative), and provides the same results regardless of capitalization.[113] For more customized results, one can use a wide variety of operators, including, but not limited to:[114][115]

- OR or | – Search for webpages containing one of two similar queries, such as marathon OR race
- AND – Search for webpages containing two similar queries, such as marathon AND runner
- - (minus sign) – Exclude a word or a phrase, so that "apple -tree" returns results where the word "tree" is not used
- "" – Force inclusion of a word or a phrase, such as "tallest building"
- * – Placeholder symbol allowing for any substitute words in the context of the query, such as "largest * in the world"
- .. – Search within a range of numbers, such as "camera $50..$100"
- site: – Search within a specific website, such as "site:youtube.com"
- define: – Search for definitions for a word or phrase, such as "define:phrase"
- stocks: – See the stock price of investments, such as "stocks:googl"
- related: – Find web pages related to specific URL addresses, such as "related:www.wikipedia.org"
- cache: – Highlights the search-words within the cached pages, so that "cache:www.google.com xxx" shows cached content with word "xxx" highlighted
- ( ) – Group operators and searches, such as (marathon OR race) AND shoes
- filetype: or ext: – Search for specific file types, such as filetype:gif
- before: – Search for results before a specific date, such as spacex before:2020-08-11
- after: – Search for results after a specific date, such as iphone after:2007-06-29
- @ – Search for a specific word on social media networks, such as "@twitter"

Google also offers a Google Advanced Search page with a web interface to access the advanced features without needing to remember the special operators.[116]
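The operators above can also be combined programmatically. The following is a minimal sketch, assuming the familiar `https://www.google.com/search?q=` URL form; the helper names `build_query` and `search_url` are hypothetical and only cover a handful of the operators.

```python
from urllib.parse import urlencode


def build_query(terms, exclude=(), site=None, filetype=None, before=None, after=None):
    """Compose a query string from plain terms and a few search operators."""
    parts = list(terms)
    # The minus sign excludes a word from the results.
    parts += [f"-{word}" for word in exclude]
    if site:
        parts.append(f"site:{site}")
    if filetype:
        parts.append(f"filetype:{filetype}")
    if before:
        parts.append(f"before:{before}")
    if after:
        parts.append(f"after:{after}")
    return " ".join(parts)


def search_url(query):
    """Percent-encode the query into a search URL (assumed URL form)."""
    return "https://www.google.com/search?" + urlencode({"q": query})


query = build_query(["spacex"], exclude=["starlink"], site="youtube.com", before="2020-08-11")
print(query)
print(search_url(query))
```

Keeping the operators as keyword arguments means the caller never has to remember the exact `name:value` syntax, which is the same convenience the Advanced Search page offers through its form fields.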

Google applies query expansion to submitted search queries, using techniques to deliver results that it considers "smarter" than the query users actually submitted. This technique involves several steps, including:[117]

In 2008, Google started to give users autocompleted search suggestions in a list below the search bar while typing, originally with the approximate result count previewed for each listed search suggestion.[118]

Google's homepage includes a button labeled "I'm Feeling Lucky". This feature originally allowed users to type in their search query, click the button and be taken directly to the first result, bypassing the search results page. Clicking it while leaving the search box empty opens Google's archive of Doodles.[119] With the 2010 announcement of Google Instant, an automatic feature that immediately displays relevant results as users are typing in their query, the "I'm Feeling Lucky" button disappeared, requiring users to opt out of Instant results through search settings to keep using the "I'm Feeling Lucky" functionality.[120] In 2012, "I'm Feeling Lucky" was changed to serve as an advertisement for Google services; when users hover their computer mouse over the button, it spins and shows an emotion ("I'm Feeling Puzzled" or "I'm Feeling Trendy", for instance), and, when clicked, takes users to a Google service related to that emotion.[121]

Tom Chavez of "Rapt", a firm helping to determine a website's advertising worth, estimated in 2007 that Google lost $110 million in revenue per year due to use of the button, which bypasses the advertisements found on the search results page.[122]

Besides the main text-based search-engine function of Google search, it also offers multiple quick, interactive features. These include, but are not limited to:[123][124][125]

During Google's developer conference, Google I/O, in May 2013, the company announced that users on Google Chrome and ChromeOS would be able to have the browser initiate an audio-based search by saying "OK Google", with no button presses required. After having the answer presented, users can follow up with additional, contextual questions; an example includes initially asking "OK Google, will it be sunny in Santa Cruz this weekend?", hearing a spoken answer, and replying with "how far is it from here?"[126][127] An update to the Chrome browser with voice-search functionality rolled out a week later, though it required a button press on a microphone icon rather than "OK Google" voice activation.[128] Google released a browser extension for the Chrome browser, named with a "beta" tag for unfinished development, shortly thereafter.[129] In May 2014, the company officially added "OK Google" into the browser itself;[130] they removed it in October 2015, citing low usage, though the microphone icon for activation remained available.[131] In May 2016, 20% of search queries on mobile devices were done through voice.[132]

| Type of site | Video search engine |
|---|---|
| Available in | Multilingual |
| Owner | |
| URL | www |
| Commercial | Yes |
| Registration | Recommended |
| Launched | August 20, 2012 |